The email is dead. Long live the voice call.

For decades, cybersecurity professionals have trained employees to scrutinize subject lines, hover over links, and check for misspelled domains. We built our defenses around the assumption that the attacker would come through text. But while we were busy securing the inbox, the attackers moved to the phone lines.

In early 2024, a finance worker at a multinational firm in Hong Kong[1] joined a video conference call with the company’s Chief Financial Officer and several other staff members. They discussed a confidential transaction, and the CFO ordered transfers totaling $25 million (HK$200 million) to various bank accounts. The worker had initial doubts, but they vanished the moment he saw his colleagues’ faces and heard their familiar voices on the call.

There was just one problem: he was the only real person on the call. Every other participant, the CFO included, was a deepfake generated by artificial intelligence, complete with convincing ambient noise.

This is the new face of vishing (voice phishing). It is no longer a crackly robocall claiming your car warranty has expired. It is a high-fidelity, real-time clone of the people you trust most. As we enter 2026, the question is no longer “Can we detect a fake?” but “Can we trust our own ears?”

The Technology of Deception

The barrier to entry for high-quality voice cloning has collapsed. Three years ago, an attacker needed hours of clean audio to train a model. Today, some tools need as little as three seconds of audio, often scraped from a TikTok video, a LinkedIn podcast, or a keynote speech on YouTube.

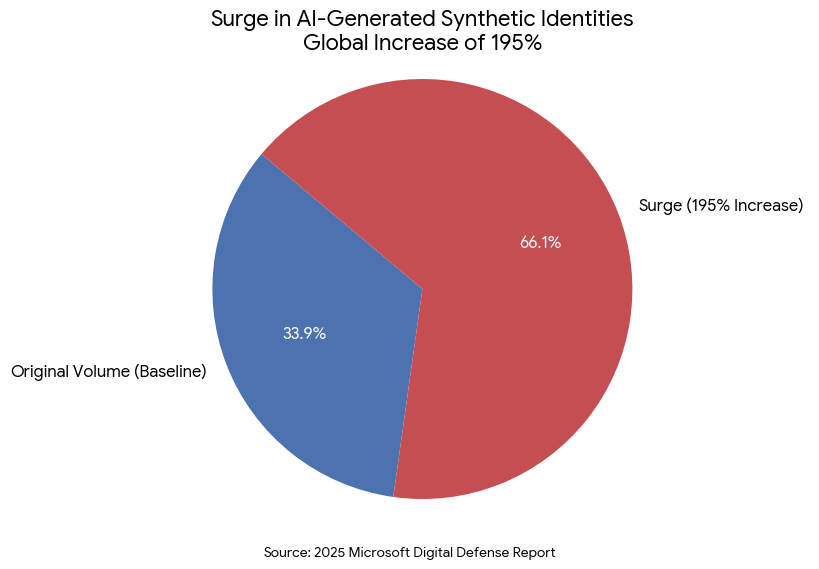

The statistics are alarming. According to the Microsoft Digital Defense Report 2025,[2] the use of AI-generated synthetic identities to bypass identity verification mechanisms has surged by 195% globally. The report notes that these forgeries are successfully defeating “liveness” tests (checks that require users to blink or move), enabling fraudsters to automate account creation and penetrate standard Know Your Customer (KYC) defenses at scale.

This technological leap has created a crisis of trust. In the past, voice was the ultimate verifier. If you received a suspicious email from the CEO, you would “call him to check.” Now, that phone call itself is the weapon.

Why Our Brains Fall for It

The danger of AI vishing is not just technological; it is biological. Human beings are evolutionarily wired to trust voice. We rely on tone, cadence, and inflection to gauge sincerity. When an AI perfectly mimics the stress in a boss’s voice or the casual informality of a coworker, it bypasses our logical defenses and triggers an emotional response.

This psychological vulnerability renders traditional training obsolete. Telling employees to “be suspicious of unknown numbers” is useless when the caller ID is spoofed and the voice is familiar.

To combat this, we must rethink education. As detailed in Emutare’s guide Security Awareness Program Design: Beyond Compliance,[3] effective training must move beyond passive video watching. It requires behavioral conditioning. Organizations need to simulate vishing attacks that use voice clones (with consent) to show employees just how convincing the technology has become. The goal is to break the automatic trust response and replace it with a “verify first” reflex, even when the caller sounds like family.

The Verification Void

If we can’t trust the voice, and we can’t trust the caller ID, what can we trust?

The answer lies in out-of-band verification: confirming a request through a separate channel that the attacker does not control. Implementing it, however, requires a significant shift in corporate culture. The finance worker in Hong Kong transferred $25 million because he had no secondary channel in which to verify the request. He was trapped inside the compromised medium (the video call).

This is where technical process meets security culture. A robust defense integrates verification steps directly into the workflow. The principles in Integration of Vulnerability Management with DevOps[4] offer a useful model: just as we use automated pipelines to verify code before it reaches production, we need “human pipelines” to verify high-value requests. This might mean that any transfer over $10,000 requires a digital signature on a secure internal platform, regardless of what the “CEO” on the phone says. The process itself becomes the security control, removing the reliance on human sensory perception.

Microsoft’s guidance What is business email compromise (BEC)?[5] makes the same point: while technical solutions such as Defender can detect spoofing, process controls are equally vital. It advises establishing “strict verification procedures for payment changes and wire transfers” that sit alongside technical defenses. This supports the “process meets security culture” approach, in which workflow rules (the pipeline) override the social engineering attempt.
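The “human pipeline” idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production control: the class and function names are hypothetical, the $10,000 threshold comes from the example policy above, and HMAC-SHA256 from the standard library stands in for a real digital signature (a production platform would more likely use asymmetric signatures tied to each approver's identity). The point is structural: the decision depends on a signed request, not on whose voice or face appeared on the call.

```python
import hashlib
import hmac
from dataclasses import dataclass
from typing import Optional

# Threshold taken from the example policy in the text; adjust to your own risk appetite.
SIGNATURE_THRESHOLD_USD = 10_000


@dataclass(frozen=True)
class PaymentRequest:  # hypothetical request record
    request_id: str
    requester: str
    beneficiary_account: str
    amount_usd: int  # whole dollars, to keep the sketch simple


def canonical_bytes(req: PaymentRequest) -> bytes:
    # Serialize the fields that must not change after approval, in a fixed order.
    fields = [req.request_id, req.requester, req.beneficiary_account, str(req.amount_usd)]
    return "|".join(fields).encode("utf-8")


def sign_request(req: PaymentRequest, key: bytes) -> str:
    # Assumption: HMAC-SHA256 stands in for a real digital signature from the internal platform.
    return hmac.new(key, canonical_bytes(req), hashlib.sha256).hexdigest()


def approve_transfer(req: PaymentRequest, signature: Optional[str], key: bytes) -> bool:
    """Return True only if the request may proceed.

    Note what is not an input: who appeared to be on the phone or video call.
    """
    if req.amount_usd <= SIGNATURE_THRESHOLD_USD:
        return True  # at or below threshold: ordinary controls apply
    if signature is None:
        return False  # above threshold and unsigned: blocked, whoever is asking
    # Constant-time comparison; any change to amount or beneficiary breaks the match.
    return hmac.compare_digest(sign_request(req, key), signature)


if __name__ == "__main__":
    key = b"demo-key-held-by-the-internal-platform"
    req = PaymentRequest("TX-001", "cfo@example.com", "ACCT-1", 25_000_000)
    print(approve_transfer(req, None, key))  # False: the "CFO" on the call is not enough
    sig = sign_request(req, key)
    print(approve_transfer(req, sig, key))  # True
    swapped = PaymentRequest("TX-001", "cfo@example.com", "ACCT-2", 25_000_000)
    print(approve_transfer(swapped, sig, key))  # False: beneficiary changed after signing
```

Because the signature covers the beneficiary account and the amount, an attacker who talks an employee into changing either one after approval invalidates the signature rather than bypassing the control.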

The Biometric Privacy Paradox

The rise of voice cloning also triggers a massive privacy crisis. Many banks and secure facilities use voice biometrics (“My voice is my password”) as an authenticator. If an attacker can clone a voice perfectly, those biometric locks are broken.

This creates a complex challenge for privacy architects. We are moving into an era where our biological traits (our faces and voices) are effectively public data that can be weaponized against us.

To navigate this, organizations must adopt the principles set out in Privacy by Design: Implementation Framework for Modern Organizations.[6] We must design systems that do not rely on static biometric markers alone. Liveness detection (technology that determines whether a voice comes from a human larynx or from a loudspeaker) must be embedded into the architecture of every voice-based access point. We must also minimize the collection of voice data. If you are recording calls for “quality assurance,” you are building a database that attackers could use to train their cloning models. Privacy by Design dictates that we retain this data only as long as necessary and encrypt it strongly so it cannot become training data for a criminal’s AI.
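The “retain only as long as necessary” rule can be made mechanical. The sketch below, assuming a hypothetical 30-day retention window and a hypothetical `legal_hold` flag on each recording, only decides which call recordings are due for deletion; secure deletion and encryption at rest are assumed to be handled by your storage layer.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List, Tuple

# Hypothetical retention window; set it from your legal and business requirements.
RETENTION = timedelta(days=30)


@dataclass
class Recording:  # hypothetical record for a stored call recording
    recording_id: str
    created_at: datetime  # timezone-aware UTC timestamp
    legal_hold: bool = False  # held recordings are exempt from purging


def split_for_retention(
    recordings: List[Recording], now: datetime
) -> Tuple[List[Recording], List[Recording]]:
    """Partition recordings into (keep, purge) under the retention policy."""
    cutoff = now - RETENTION
    keep: List[Recording] = []
    purge: List[Recording] = []
    for rec in recordings:
        if rec.legal_hold or rec.created_at >= cutoff:
            keep.append(rec)
        else:
            purge.append(rec)
    return keep, purge


if __name__ == "__main__":
    now = datetime(2026, 1, 31, tzinfo=timezone.utc)
    recs = [
        Recording("r1", datetime(2026, 1, 20, tzinfo=timezone.utc)),
        Recording("r2", datetime(2025, 11, 1, tzinfo=timezone.utc)),
        Recording("r3", datetime(2025, 10, 1, tzinfo=timezone.utc), legal_hold=True),
    ]
    keep, purge = split_for_retention(recs, now)
    print([r.recording_id for r in keep])   # ['r1', 'r3']
    print([r.recording_id for r in purge])  # ['r2']
```

Running a purge like this on a schedule keeps the archive small, which limits how much clean voice audio an intruder could exfiltrate for cloning.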

Practical Defenses for Leaders

The vishing epidemic is terrifying, but it is manageable. Here are three actionable steps business leaders can take immediately:

1. Establish “Safe Words”

It sounds primitive, but it works. High-risk teams (C-suite, Finance, HR) should agree on a rotating verbal passphrase that is never written down electronically. If the “CEO” calls and asks for a wire transfer but cannot produce the phrase, hang up.

2. Death to the “Urgent” Request

Deepfakes thrive on urgency. The attackers will always claim they are “about to board a plane” or “in a meeting.” Policy should dictate that financial urgency triggers an automatic pause. Slowing down the process is the most effective way to break the spell of the deepfake.

3. Implement a Multi-Factor Challenge

Never rely on a single channel. If the request comes via video, verify via encrypted chat (Signal/Teams). If it comes via phone, verify via email. The attacker is rarely in control of all channels simultaneously.
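Steps 2 and 3 can also be enforced in software rather than left to willpower. The sketch below assumes a hypothetical four-hour hold on anything flagged urgent and treats a confirmation as valid only if it arrived on a channel other than the one the request came in on; the names, the hold length, and the channel labels are illustrative assumptions. It also assumes each confirmation is obtained by contacting the requester with details from an internal directory, not details supplied in the request itself.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import Set

# Hypothetical cooling-off period for any request flagged as urgent.
URGENT_HOLD = timedelta(hours=4)


@dataclass
class TransferRequest:  # hypothetical request record
    request_id: str
    origin_channel: str  # channel the request arrived on, e.g. "video" or "phone"
    received_at: datetime  # timezone-aware UTC timestamp
    marked_urgent: bool = False
    confirmed_channels: Set[str] = field(default_factory=set)

    def confirm_via(self, channel: str) -> None:
        # Record a confirmation obtained by contacting the requester on `channel`
        # (assumed: using contact details from an internal directory).
        self.confirmed_channels.add(channel)


def can_release(req: TransferRequest, now: datetime) -> bool:
    # Step 2: urgency triggers a pause, not a shortcut.
    if req.marked_urgent and now < req.received_at + URGENT_HOLD:
        return False
    # Step 3: a confirmation on the same channel as the request does not count.
    independent = req.confirmed_channels - {req.origin_channel}
    return bool(independent)


if __name__ == "__main__":
    t0 = datetime(2026, 1, 5, 9, 0, tzinfo=timezone.utc)
    req = TransferRequest("TX-42", "video", t0, marked_urgent=True)
    req.confirm_via("video")  # same channel as the request
    print(can_release(req, t0 + timedelta(hours=5)))  # False
    req.confirm_via("signal")  # independent channel
    print(can_release(req, t0 + timedelta(hours=1)))  # False: still inside the hold
    print(can_release(req, t0 + timedelta(hours=5)))  # True
```

Because `can_release` never asks how convincing the caller was, a perfect voice clone gains nothing: it still has to wait out the hold and still has to compromise a second, independent channel.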

Conclusion

The $25 million Hong Kong heist was not an anomaly; it was a prologue. As AI tools become cheaper and faster, we will see these attacks move down the food chain, targeting not just Fortune 500 CFOs but small business owners and HR managers.

We are entering a “Zero Trust” era for human communication. This does not mean we should become paranoid; it means we must become procedural. By combining empathetic Security Awareness training, rigorous Vulnerability Management of our processes, and resilient Privacy by Design architecture, we can build an organization that verifies truth rather than blindly accepting it.

Your CFO’s voice may be cloneable, but your security culture does not have to be. Emutare empowers your organization to detect deception through comprehensive Cybersecurity Awareness Training that moves beyond passive learning to behavioral conditioning. Our Cybersecurity Advisory and Risk Assessment services help you implement the robust verification frameworks and Privacy by Design architectures needed to defeat deepfakes. Don’t let AI-driven threats breach your defenses. Strengthen your human firewall and secure your operational integrity with Emutare’s expert guidance today.

References

- [1] World Economic Forum. (2025). ‘This Happens More Frequently Than People Realize’: Arup Chief On The Lessons Learned From A $25m Deepfake Crime. https://www.weforum.org/stories/2025/02/deepfake-ai-cybercrime-arup/

- [2] Microsoft. (2025). Microsoft Digital Defense Report 2025. https://cdn-dynmedia-1.microsoft.com/is/content/microsoftcorp/microsoft/msc/documents/presentations/CSR/Microsoft-Digital-Defense-Report-2025.pdf

- [3] Emutare. (2025). Security Awareness Program Design: Beyond Compliance. https://insights.emutare.com/security-awareness-program-design-beyond-compliance/

- [4] Emutare. (2025). Integration of Vulnerability Management with DevOps. https://insights.emutare.com/integration-of-vulnerability-management-with-devops/

- [5] Microsoft. (n.d.). What is business email compromise (BEC)? https://www.microsoft.com/en-us/security/business/security-101/what-is-business-email-compromise-bec

- [6] Emutare. (2025). Privacy by Design: Implementation Framework for Modern Organizations. https://insights.emutare.com/privacy-by-design-implementation-framework-for-modern-organizations/
