In the cinematic version of cybersecurity, the “insider threat” is almost always a dramatic figure. They are the disgruntled former employee stealing trade secrets at midnight, or the corporate spy planting malware on a server farm. These narratives make for excellent thrillers, but they create a dangerous blind spot for business leaders.

The reality of the insider threat landscape is far more mundane and, paradoxically, far more dangerous.

Most data breaches do not start with a malicious vendetta. They start with an overworked marketing manager who bypasses a complex VPN to upload a large file to a personal Google Drive because the deadline is in an hour. They start with a developer who hard-codes an API key into a public repository because they simply forgot to scrub it.

This is the crucial distinction between the Malicious Insider and the Negligent Insider. Treating them as the same problem is a strategic failure. If you treat a negligent employee like a criminal, you destroy culture. If you treat a malicious employee like a stumbling user, you lose data.

To build a truly resilient organization, we must dissect the anatomy of these two distinct threats and engineer our systems to handle both.

The Scale of the “Accidental” Threat

It is easy to assume that our employees are our greatest defense, but the data suggests they are often our most significant vulnerability. However, this is rarely due to ill intent.

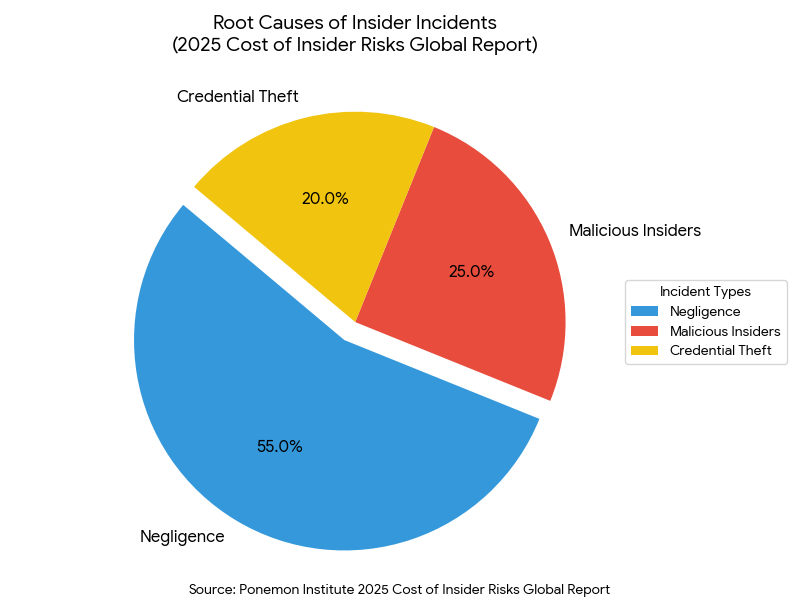

According to the 2025 Cost of Insider Risks Global Report [1] by the Ponemon Institute, a staggering 55% of all insider incidents are caused by negligence rather than malicious intent. These are not bad people; they are often your hardest workers trying to navigate friction-filled security policies to get their jobs done.

When we look at the financial impact, the numbers are equally sobering. While malicious attacks often grab headlines, the cumulative cost of negligence is massive due to its frequency. Organizations that fail to distinguish between these two categories often waste millions on “monitoring” tools that look for spies, while completely missing the “clumsy” behaviors that actually cause the breaches.

This brings us to the first pillar of defense: Education that actually works. A generic annual training video does not help an employee distinguish between a safe workaround and a dangerous policy violation. As discussed in Emutare’s guide to Security Awareness Program Design: Beyond Compliance [2], modern training must move beyond checking a compliance box. It must focus on behavioral modification. We need to identify high-risk roles (like Finance or R&D) and tailor the training to the specific accidental errors they are likely to make, rather than feeding them generic warnings about spies.

Anatomy of a Negligent Insider

Why do good employees do bad things to security? The answer is almost always “friction.”

In many organizations, security controls are designed by engineers, for engineers. They do not account for the workflow of a salesperson or a graphic designer. When a security policy makes a task impossible (for example, blocking all USB drives when a user needs to transfer a presentation to a client), the employee will find a workaround. They will email the file to their personal account.

This is “Shadow IT,” and it is the hallmark of the negligent insider.

A study published in the South African Journal of Information Management (2024) titled “A model on workarounds and information security integrity” [3] highlights that employees characterize security bypasses as creative problem-solving rather than malicious violations. They prioritize their own judgment over organizational policy to maintain workflow efficiency.

To solve this, we must shift our thinking from “policing users” to “enabling users.” This is where the concept of Privacy by Design becomes critical. Instead of blaming the user for using a non-compliant tool, we should ask why the compliant tool was insufficient. As outlined in the Privacy by Design: Implementation Framework for Modern Organizations [4], we must embed privacy and security controls into the architecture itself so that the “secure way” is also the “easiest way.” If the system is designed correctly, a user shouldn’t be able to accidentally upload sensitive data to a public cloud bucket. The guardrails should be automatic.

Anatomy of a Malicious Insider

While less frequent, the malicious insider is far harder to detect because they often use legitimate credentials to do their dirty work. They aren’t “breaking in”; they have a key.

The malicious insider is usually motivated by one of three factors:

- Financial Gain: Selling data or credentials.

- Espionage: Stealing IP for a competitor or nation-state.

- Revenge: Sabotaging systems due to a perceived grievance (layoffs, missed promotions).

Unlike the negligent user who makes a mistake and then continues working, the malicious actor displays patterns of “abnormal” behavior. They might access files they haven’t touched in years, download massive datasets at 3 AM, or suddenly disable logging software on their machine.

This is why technical vigilance is non-negotiable. You cannot rely on trust alone. A robust strategy for the Integration of Vulnerability Management with DevOps [5] is essential here. By continuously scanning your environment and code repositories, you can detect when an insider (malicious or otherwise) introduces a vulnerability or creates a “backdoor.” In a DevOps environment, a malicious developer might try to inject a vulnerability into the code to exploit later. Automated vulnerability scanning acts as a non-biased auditor, catching these issues before they reach production.
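To make the idea of continuously scanning code repositories concrete, here is a minimal Python sketch of one narrow kind of check: looking for hard-coded credentials before a commit is accepted. The rule names and the `scan_text` helper are hypothetical and exist only for illustration. The two patterns are simplified assumptions; a production scanner would use a much larger, maintained rule set.

```python
import re

# Hypothetical rules for illustration only; real scanners ship far larger rule sets.
SECRET_PATTERNS = {
    # AWS access key IDs are commonly "AKIA" followed by 16 uppercase letters or digits.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # A quoted literal of 16+ characters assigned to a name like api_key, secret, or token.
    "generic_api_key": re.compile(
        r"(?i)\b(api[_-]?key|secret|token)\b\s*[:=]\s*['\"]([A-Za-z0-9_\-]{16,})['\"]"
    ),
}


def scan_text(text):
    """Return a list of (line_number, rule_name) for every suspected secret."""
    findings = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for rule_name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((line_number, rule_name))
    return findings


if __name__ == "__main__":
    sample = 'import os\nAPI_KEY = "abcd1234efgh5678ijkl"\nkey = os.environ["API_KEY"]\n'
    for line_number, rule_name in scan_text(sample):
        print(f"line {line_number}: possible {rule_name}")
```

A check like this treats the negligent and the malicious developer identically, which is the point: it flags the artifact, not the intent, and leaves the judgment about intent to a human reviewer.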

The “Just Culture”: Why Psychology Matters

One of the most critical errors organizations make is punishing negligence too harshly.

Imagine an employee accidentally clicks a phishing link. If they know they will be fired or publicly shamed, they will hide the mistake. They will close the browser, delete the email, and pray nothing happens. This gives the attacker days or weeks to move laterally through the network undetected.

Conversely, in a “Just Culture” (a concept borrowed from aviation safety), errors are treated as learning opportunities. If an employee reports their own mistake immediately, they are thanked, not punished.

Industry evidence supports this approach. The 2025 Verizon Data Breach Investigations Report [6] shows that organizations investing in security awareness and training achieved a fourfold increase in employee reporting of phishing emails. Why? Because consistent education and a supportive reporting environment encouraged users to flag suspicious activity immediately, improving early detection and accelerating incident response rather than allowing threats to go unreported.

However, this leniency cannot extend to malice. The distinction must be clear:

- Negligence = Training + System Adjustment (Fix the process).

- Malice = Revocation of Access + Legal Action (Remove the threat).

Implementation Guidance: A Dual-Track Strategy

So, how do business leaders and IT professionals implement a strategy that handles both? We recommend a dual-track approach.

Track 1: Reducing Negligence (The “Safety Net” Approach)

- Conduct a “Friction Audit”: Survey your employees to find out which security policies slow them down the most. These are the areas where negligence is currently happening. If your password policy requires a change every 30 days, users are likely writing passwords on Post-it notes. That is a system failure, not a user failure.

- Automate Data Handling: Do not rely on users to classify data as “Confidential.” Use tools that automatically tag and encrypt data based on content. If a user tries to email a file labeled “Internal Only” to a Gmail address, the system should block it and display a helpful coaching tip, not just a cryptic error message.

- Gamify Awareness: Referencing the Security Awareness Program Design: Beyond Compliance article, move away from fear-based training. Use simulations that reward users for catching phishers. Make security a badge of honor, not a chore.
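The “block it and coach the user” behavior described in the Automate Data Handling item can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a description of any particular DLP product: the label names, the `example.com` corporate domain, the `Decision` type, and the `check_outbound_email` function are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical values: the label names and the domain list are assumptions.
RESTRICTED_LABELS = {"Internal Only", "Confidential"}
CORPORATE_DOMAINS = {"example.com"}


@dataclass
class Decision:
    allowed: bool
    message: str  # coaching text shown to the user when the send is blocked


def check_outbound_email(label, recipient):
    """Block restricted files sent outside the company and explain what to do instead."""
    domain = recipient.rsplit("@", 1)[-1].lower()
    if label in RESTRICTED_LABELS and domain not in CORPORATE_DOMAINS:
        return Decision(
            allowed=False,
            message=(
                f"This file is labeled '{label}' and cannot be sent to {domain}. "
                "To share it externally, use the approved file-sharing portal "
                "or ask the data owner to reclassify it."
            ),
        )
    return Decision(allowed=True, message="")


if __name__ == "__main__":
    print(check_outbound_email("Internal Only", "someone@gmail.com").message)
```

The design choice worth noting is that the block and the explanation travel together. The user learns what the compliant path is at the moment they need it, rather than hitting a dead end and improvising a workaround.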

Track 2: Detecting Malice (The “Zero Trust” Approach)

- Implement UEBA (User and Entity Behavior Analytics): Standard antivirus doesn’t catch insiders. UEBA tools establish a “baseline” of normal behavior for every employee. If Bob from Accounting usually accesses 50 files a day, and suddenly accesses 5,000, an alert is triggered.

- Enforce Least Privilege: A malicious insider can only steal what they can access. Most breaches spiral out of control because employees have “standing access” to data they don’t need. Review permissions quarterly.

- Code Governance: As highlighted in Integration of Vulnerability Management with DevOps, ensure that no single developer can push code to production without a secondary review (pull request approval). This prevents a disgruntled engineer from planting logic bombs.
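The baseline idea behind UEBA, from the first item in this track, can be illustrated with a deliberately simple Python sketch. Commercial UEBA tools model many more signals than a single daily count; this example only assumes a per-user history of files accessed per day and flags a day that sits far above that user’s own norm. The `is_anomalous` function and the threshold of three standard deviations are assumptions chosen for illustration.

```python
from statistics import mean, stdev


def is_anomalous(history, today, threshold=3.0):
    """Flag today's count if it is more than `threshold` standard
    deviations above this user's own historical average."""
    if len(history) < 2:
        return False  # not enough data to form a baseline
    baseline = mean(history)
    spread = stdev(history)
    if spread == 0:
        # A perfectly flat history: any increase is a deviation.
        return today > baseline
    return (today - baseline) / spread > threshold


if __name__ == "__main__":
    # Hypothetical daily file-access counts for one user.
    bob_history = [48, 52, 50, 47, 53, 50, 49]
    print(is_anomalous(bob_history, 51))    # an ordinary day
    print(is_anomalous(bob_history, 5000))  # the 5,000-file day
```

Because the comparison is against each user’s own history, a data engineer who routinely touches thousands of files does not trigger the same alert that Bob from Accounting would.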

The Role of Privacy Architecture

It is important to note that protecting against insiders is also a privacy obligation. Under regulations like GDPR and CCPA, you are responsible for data misuse regardless of whether the cause was a hacker or a clumsy employee.

Adopting a framework like Privacy by Design: Implementation Framework for Modern Organizations helps mitigate insider risk by minimizing the data pool itself. If you don’t collect the data, an insider can’t steal it. If the data is pseudonymized by default, a negligent export of a database yields a file that is far less useful to an attacker, provided the pseudonymization key is stored separately. Privacy engineering is, in effect, insider threat mitigation.
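As a rough illustration of pseudonymization by default, the Python sketch below replaces direct identifiers with a keyed hash (HMAC-SHA256) before a record leaves the primary system. The `pseudonymize` and `pseudonymize_record` helpers and the field names are hypothetical. The sketch assumes the key is held in a separate secrets store, because anyone holding both the export and the key can re-link hashes to known identifiers.

```python
import hashlib
import hmac


def pseudonymize(value, key):
    """Replace an identifier with a keyed HMAC-SHA256 digest (hex string)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def pseudonymize_record(record, fields, key):
    """Return a copy of `record` with the named fields pseudonymized."""
    return {
        name: pseudonymize(str(value), key) if name in fields else value
        for name, value in record.items()
    }


if __name__ == "__main__":
    # Hypothetical key for demonstration; a real key belongs in a secrets manager.
    demo_key = b"demo-key-not-for-production"
    row = {"email": "bob@example.com", "plan": "pro"}
    print(pseudonymize_record(row, {"email"}, demo_key))
```

A keyed hash is used rather than a plain hash so that an attacker who obtains only the export cannot simply hash a list of known email addresses and match them against the file.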

The Future: AI and the Insider

Looking forward, the line between negligence and malice may blur further with the rise of AI.

Consider an employee who pastes proprietary source code into a public Large Language Model (like ChatGPT) to ask for debugging help. Is this malice? No. Is it negligence? Yes. Does it result in a data leak? Absolutely.

The 2025 Verizon Data Breach Investigations Report (DBIR) notes a rising trend in “non-malicious data mishandling” involving AI tools. This confirms that our policies must evolve faster than our tools. We cannot simply ban AI; we must provide secure, private instances of these tools so that the “easy way” is also the “secure way.”

Conclusion

The “Insider Threat” is not a single monolith. It is a spectrum of human behavior ranging from the tired parent making a click error to the calculated spy seeking profit.

For too long, the industry has tried to solve a human problem with purely technical tools, or conversely, tried to solve a technical problem by blaming users. The path forward requires nuance. We must extend grace to the negligent, offering them better tools and “safety net” systems that catch them when they fall. Simultaneously, we must maintain rigorous, “Zero Trust” vigilance to identify and stop the truly malicious few.

By combining empathetic Security Awareness, architectural Privacy by Design, and rigorous Vulnerability Management, organizations can build an immune system that is resilient enough to handle both mistakes and attacks.

Don’t let insider threats or negligent slips compromise your data. Emutare transforms your security culture with Cybersecurity Awareness Training that moves beyond compliance to truly equip staff against evolving risks. We help you build resilient systems through expert Cybersecurity Governance and Consultation, reducing friction for honest employees while detecting malicious actors with advanced SIEM and XDR deployment. From comprehensive risk assessments to proactive incident response planning, we provide the dual-track strategy necessary to handle both human error and calculated attacks. Secure your organization across the full spectrum of insider risk with Emutare.

References

1. Ponemon Institute. (2025). Cost of Insider Risks Global Report. https://www.dtexsystems.com/newsroom/press-releases/2025-ponemon-insider-threat-report-release/

2. Emutare. (2025). Security Awareness Program Design: Beyond Compliance. https://insights.emutare.com/security-awareness-program-design-beyond-compliance/

3. Njenga, K., Nyamandi, N. F., & Segooa, M. A. (2024). A model on workarounds and information security integrity. South African Journal of Information Management, 26(1), a1853. https://sajim.co.za/index.php/sajim/article/view/1853/2915

4. Emutare. (2025). Privacy by Design: Implementation Framework for Modern Organizations. https://insights.emutare.com/privacy-by-design-implementation-framework-for-modern-organizations/

5. Emutare. (2025). Integration of Vulnerability Management with DevOps. https://insights.emutare.com/integration-of-vulnerability-management-with-devops/

6. Verizon. (2025). Data Breach Investigations Report. https://www.verizon.com/business/resources/Tbb1/reports/2025-dbir-data-breach-investigations-report.pdf
