We have all been there. You sign up for a subscription service in seconds, with a single click, a seamless “Apple Pay” confirmation, and you are in. Three months later, you decide to cancel. Suddenly, the seamless highway becomes a labyrinth. The “Cancel” button is buried three levels deep in a “Settings” menu under a tab vaguely labeled “Account Management.” When you finally find it, you are forced to answer a survey. Then, you are presented with a “Confirm” screen where the “Keep My Subscription” button is bright blue, and the “Cancel” text is a microscopic grey link.

This is not a bad design. It is a hostile design.

In the industry, we call these Dark Patterns. They are user interface (UI) choices meticulously crafted to trick, coerce, or manipulate users into making decisions that benefit the business at the expense of the user’s autonomy.1 For over a decade, these tactics were celebrated as “Growth Hacking”: clever psychological nudges to boost conversion rates and reduce churn.

But in 2026, the wind has shifted. Regulators across the globe, from the European Union to the US Federal Trade Commission (FTC), have declared war on manipulative design. What was once a clever marketing tactic is now a legal liability. More importantly, it has become a silent killer of the most valuable currency in the digital economy: trust.

The Anatomy of Manipulation

To combat dark patterns, we must first recognize them. They are rarely accidental; they are built on a sophisticated understanding of human cognitive biases.

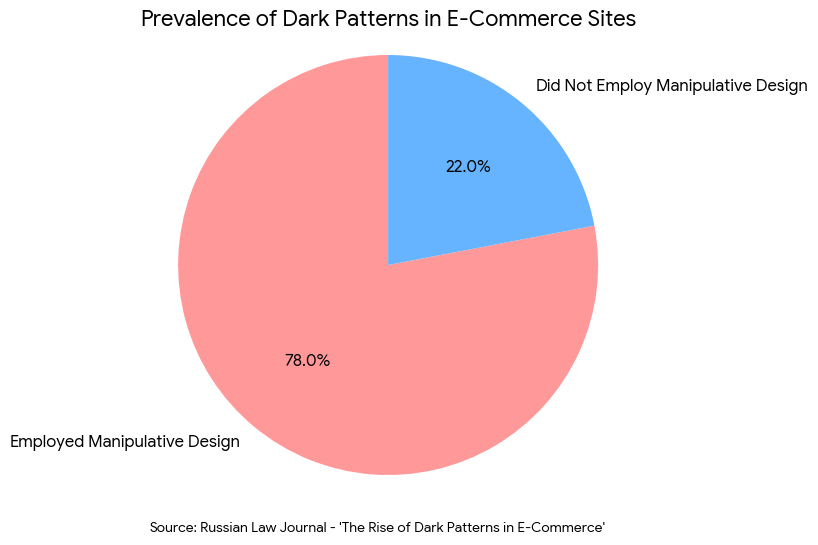

Recent scholarship underscores just how pervasive these tactics have become. In a significant study titled “The Rise of Dark Patterns in E-Commerce,”1 published in the Russian Law Journal, researchers found that approximately 78% of e-commerce sites investigated employed at least one form of manipulative design, such as hidden charges or forced continuity.

This saturation is a calculated response to the tactic’s efficacy. Research confirms that these designs, while deceptive, are highly effective at driving metrics. A landmark experiment by Luguri and Strahilevitz (2021) titled “Shining a Light on Dark Patterns”2 demonstrated that aggressive dark patterns made users “almost four times as likely to subscribe” compared to a control group, albeit at the cost of significant consumer anger.

Common examples include:

- The Roach Motel: Easy to get in, impossible to get out (e.g., you can sign up online, but must call a phone number between 9 AM and 5 PM to cancel).

- Privacy Zuckering: Named after Mark Zuckerberg, this involves tricking users into sharing more private information than they intended, often through confusing “default” settings.

- Confirmshaming: Manipulating the user’s emotions to steer their choice (e.g., to decline a newsletter, you must click a link that says, “No thanks, I prefer to lose money.”).

- Forced Continuity: When a free trial silently converts into a paid subscription without adequate warning or an easy way to opt out.

These patterns exploit the gap between “fast thinking” (intuitive, automatic) and “slow thinking” (deliberate, logical). By adding friction to the choices the business dislikes (cancellation) and removing friction from the choices it prefers (subscription), companies effectively hijack the user’s will.

The Regulatory Backlash: From “Unfair” to “Illegal”

For years, dark patterns existed in a legal grey area. That era is over.

Under Article 25 of the Digital Services Act (DSA),3 the European Union explicitly bans online platform interfaces designed to “deceive or manipulate” users. Among the practices the article singles out is making the procedure for terminating a service more difficult than subscribing to it. The principle is simple: leaving should be as frictionless as signing up. Although the regulation entered into force in November 2022 and became applicable to all covered platforms in February 2024, 2025 marks the shift to full enforcement velocity. Platforms are now legally compelled to dismantle dark patterns and remove the obstacles between you and the “unsubscribe” button, or face massive regulatory consequences.

In the United States, the FTC has been equally aggressive. The agency’s high-profile lawsuits against major tech giants (including Amazon for its Prime cancellation process and Adobe for its hidden termination fees) signal a new enforcement standard. Even though the specific “Click-to-Cancel” rule faced judicial hurdles in mid-2025, the FTC continues to prosecute these designs under Section 5 of the FTC Act, which prohibits “unfair or deceptive acts or practices.”

The message to business leaders is clear: Your UX design is now a compliance artifact. If your interface tricks a user, you are not just risking a bad Yelp review; you are risking a multimillion-dollar fine.

The Hidden Cost: Privacy and Consent

The intersection of dark patterns and privacy is perhaps the most dangerous territory for modern organizations.

Under laws like the GDPR and CCPA, user consent must be “freely given, specific, informed, and unambiguous.” If a user clicks “Agree” because the button was misleading, or because the “Reject” button was hidden, that consent is legally void.

This is where the principles of Privacy by Design: Implementation Framework for Modern Organizations4 become critical. Privacy by Design argues that privacy controls must be the default setting, not an option the user has to fight to find. When an organization uses dark patterns to obtain data usage consent (e.g., pre-checked boxes for marketing emails), it is violating the core tenet of the framework.

A true Privacy by Design approach treats the user’s attention with respect. It presents choices neutrally, without visual bias. It assumes that if a user wants to share data, they will do so voluntarily, without being tricked. This shifts the metric of success from “Data Harvested” to “Verified Trust.”

The “Human Firewall” and Design Ethics

We often talk about the “Human Firewall” in the context of preventing cyberattacks. We train employees not to click on phishing links or download suspicious attachments. But there is a cruel irony in training employees to spot external manipulation (phishing) while simultaneously asking our product teams to build internal manipulation (dark patterns) into our own apps.

We are essentially normalizing deception.

A robust Security Awareness Program Design: Beyond Compliance5 should expand its scope to include design ethics. Security awareness isn’t just for the end-user; it is for the developers and designers building the product. Training programs should include modules on “Ethical UI,” teaching product teams how to spot and avoid manipulative patterns.

Tricking a user isn’t just bad design; it is an act of betrayal. As outlined in the paper Dark Patterns as Disloyal Design,6 these tactics represent a distinct form of disloyalty where the firm profits directly from the user’s confusion. The authors contend that once a company rationalizes this exploitation in its product design, it has already crossed the ethical line, making subsequent failures in privacy and security not only likely, but expected.

Auditing the User Interface: A DevOps Approach

How do we practically eliminate dark patterns? We must treat them like software bugs.

In the world of code, we use automated scanners to find vulnerabilities like SQL Injection or Cross-Site Scripting. We need to apply this same rigor to our user experience. This concept aligns with the Integration of Vulnerability Management with DevOps.7

Just as we scan code for security flaws before deployment, we should audit our UI for “autonomy flaws.”

- The “Click Count” Test: Does it take 2 clicks to sign up and 12 clicks to cancel? That is a bug. Fail the build.

- The “Visual Hierarchy” Audit: Is the “Accept” button 50px high and blue, while the “Reject” button is a text link? That is a bug. Fail the build.

- The “Copy” Review: Does the text attempt to shame the user (“I don’t want to save money”)? That is a defect.

By integrating these checks into the Quality Assurance (QA) pipeline, organizations can catch dark patterns before they reach the public. This turns “Ethical Design” from a philosophical debate into a measurable engineering standard.
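The three checks above could be sketched as a small audit step in a test suite. This is a minimal illustration, not a definitive implementation: the `Control` type, the thresholds (`max_ratio`, `max_area_ratio`), and the shaming phrase list are all assumptions made for the example, and a real pipeline would feed in measurements from whatever UI test harness the team already uses.

```python
from __future__ import annotations

import re
from dataclasses import dataclass


@dataclass
class Control:
    """A rendered button or link, as measured by a hypothetical UI test harness."""
    label: str
    width_px: int
    height_px: int
    is_text_link: bool = False

    @property
    def area(self) -> int:
        return self.width_px * self.height_px


# Illustrative phrases only; a real list would be maintained by the team.
SHAMING_PATTERNS = [
    re.compile(r"\bno thanks, i (prefer|like|want) to\b", re.IGNORECASE),
    re.compile(r"\bi don['’]t want to save\b", re.IGNORECASE),
]


def click_count_findings(signup_clicks: int, cancel_clicks: int,
                         max_ratio: float = 1.0) -> list[str]:
    """Flag a flow where cancelling needs more clicks than signing up (times max_ratio)."""
    if cancel_clicks > signup_clicks * max_ratio:
        return [f"cancel takes {cancel_clicks} clicks vs {signup_clicks} to sign up"]
    return []


def visual_hierarchy_findings(accept: Control, reject: Control,
                              max_area_ratio: float = 1.5) -> list[str]:
    """Flag a reject option that is visually subordinate to the accept option."""
    findings = []
    if reject.is_text_link and not accept.is_text_link:
        findings.append(f"'{reject.label}' is a text link while '{accept.label}' is a button")
    if reject.area == 0 or accept.area / reject.area > max_area_ratio:
        findings.append(f"'{accept.label}' is much larger than '{reject.label}'")
    return findings


def copy_findings(labels: list[str]) -> list[str]:
    """Flag labels that match any of the shaming patterns."""
    return [f"shaming copy: {label!r}" for label in labels
            if any(p.search(label) for p in SHAMING_PATTERNS)]


def audit(signup_clicks: int, cancel_clicks: int,
          accept: Control, reject: Control, labels: list[str]) -> list[str]:
    """Run all three checks and return every finding; an empty list means a pass."""
    return (click_count_findings(signup_clicks, cancel_clicks)
            + visual_hierarchy_findings(accept, reject)
            + copy_findings(labels))
```

A CI job could call `audit(...)` with the measured values for each consent or cancellation screen and fail the build whenever the returned list is non-empty. The thresholds are deliberately parameters, since what counts as an acceptable size difference or click ratio is a policy decision for the team, not something the code can settle.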

The Business Case for Radical Transparency

The ultimate argument against dark patterns is not legal; it is financial.

We are entering the age of the “Trust Economy.” In a world flooded with AI-generated spam, deepfakes, and automated scams, human trust is becoming the most scarce and valuable resource.

A study in Behavioural Public Policy (2025), Dark patterns and consumer vulnerability,8 found that while dark patterns increase short-term conversions, they nearly double long-term churn. Users who feel tricked do not just leave; they leave angry. They tell their friends. They post on social media. They actively sabotage the brand.

Conversely, companies that practice “Radical Transparency” (making it laughably easy to cancel, clearly explaining how data is used, and respecting user intent) see a different phenomenon: the “Boomerang Effect.” Users who leave on good terms are 30% more likely to return later than those who feel trapped.

Transparency is a competitive moat. When your competitor is trapping users in a Roach Motel, and you offer a simple “One-Click Goodbye,” you win the reputation war. You signal that your product is good enough to stand on its own merits, without needing a trap door to keep customers inside.

Stop risking regulatory fines and start building trust. Emutare empowers your organization to dismantle manipulative design through expert Cybersecurity Governance and Advisory services. We help you navigate complex regulations to ensure your interfaces remain compliant. Our Vulnerability Management services treat design flaws with the rigor of security bugs, while our specialized Cybersecurity Awareness Training equips your product teams to build ethical UI. Choose Emutare to turn your user experience into a competitive advantage through verified trust and radical transparency.

Conclusion

The era of “Move Fast and Break Things” is over. We are now in the era of “Move Deliberately and Build Trust.”

Dark patterns are a relic of a juvenile digital age, a time when we thought we could outsmart our users with clever buttons and tricky text. But users have evolved. Regulators have caught up. The tactics that built the unicorns of 2015 will destroy the brands of 2026.

For business leaders, the path forward is clear. Audit your digital presence. Strip away the manipulation. Empower your users to leave, and you will find they are much more willing to stay.

References

1. Patil, A. A. (2023). The rise of dark patterns in e-commerce: Comparative legal challenges and consumer protection strategies. Russian Law Journal, 11(6), 1296–1321. https://www.russianlawjournal.org/index.php/journal/article/view/4664

2. Luguri, J., & Strahilevitz, L. J. (2021). Shining a light on dark patterns. Journal of Legal Analysis, 13(1), 43–109. https://academic.oup.com/jla/article/13/1/43/6180579

3. Regulation (EU) 2022/2065 of the European Parliament and of the Council of 19 October 2022 on a Single Market For Digital Services and amending Directive 2000/31/EC (Digital Services Act). (2022). Official Journal of the European Union, L 277, 1–102. https://eur-lex.europa.eu/eli/reg/2022/2065/oj

4. Emutare. (2025). Privacy by Design: Implementation Framework for Modern Organizations. https://insights.emutare.com/privacy-by-design-implementation-framework-for-modern-organizations/

5. Emutare. (2025). Security Awareness Program Design: Beyond Compliance. https://insights.emutare.com/security-awareness-program-design-beyond-compliance/

6. Gunawan, J., Hartzog, W., Richards, N., Choffnes, D., & Wilson, C. (2025). Dark patterns as disloyal design. Indiana Law Journal, 100(4), 1389. https://scholarship.law.bu.edu/cgi/viewcontent.cgi?article=5074&context=faculty_scholarship

7. Emutare. (2025). Integration of Vulnerability Management with DevOps. https://insights.emutare.com/integration-of-vulnerability-management-with-devops/

8. Zac, A., Huang, Y.-C., von Moltke, A., Decker, C., & Ezrachi, A. (2025). Dark patterns and consumer vulnerability. Behavioural Public Policy. Advance online publication. https://doi.org/10.1017/bpp.2024.49
