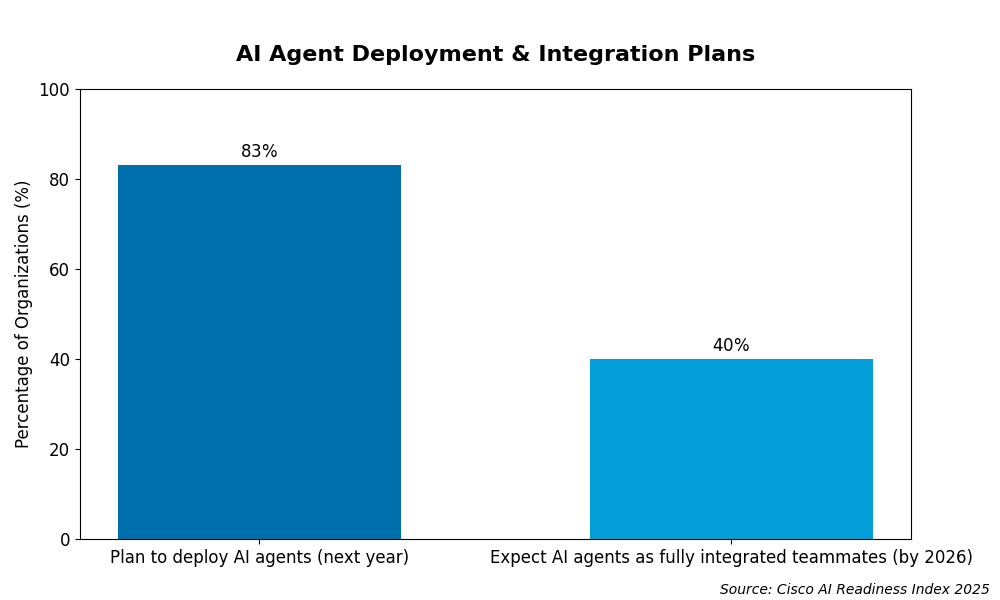

In the software era, we were taught that “software is eating the world.” By 2026, it is more accurate to say that AI is digesting the enterprise. Cisco reports that 83% of organizations plan to deploy AI agents within the next year, marking a massive surge in the transition toward autonomous digital workers. This reflects a rapid evolution from simple chatbots to agents capable of executing complex workflows alongside human employees.

While 40% of companies expect these agents to be fully integrated teammates by the end of 2026, a significant readiness gap remains. The Cisco AI Readiness Index 2025 [1] finds that only 13% of organizations have the infrastructure necessary to scale these systems, and warns that ambition is currently outpacing technical preparation.

As these agents take on roles in supply chain orchestration, customer service, and even code generation, they bring with them a unique and often invisible supply chain.

For business leaders and IT professionals, this shift introduces a critical governance gap. While most organizations have finally embraced the Software Bill of Materials (SBOM) to track their code dependencies, these documents are insufficient for the AI era. A traditional SBOM can tell you whether a library has a known vulnerability, but it cannot tell you whether the model that library loads was trained on poisoned data or whether its decision-making logic is fundamentally biased. This is where the AI Bill of Materials (AI-BOM) becomes the most important tool in your security arsenal.

The Visibility Crisis in the AI Supply Chain

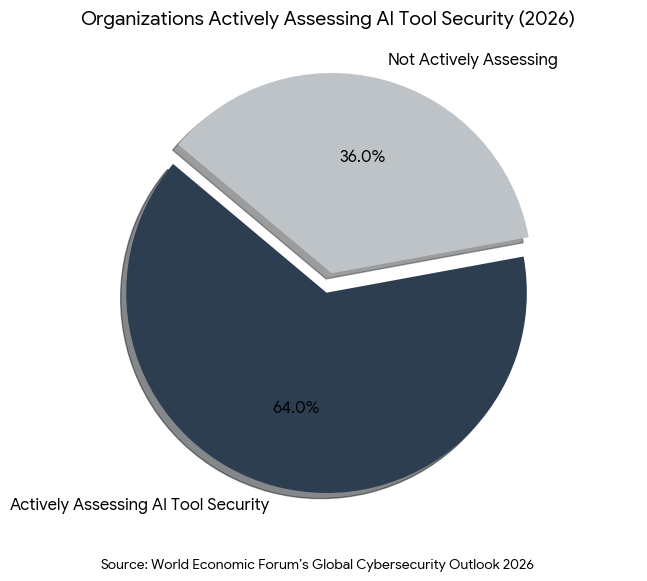

The modern AI system is not a single entity; it is a complex assembly of foundation models, fine-tuning datasets, prompt templates, and third-party orchestration frameworks. According to the World Economic Forum’s Global Cybersecurity Outlook 2026 [2], the percentage of organizations actively assessing the security of their AI tools nearly doubled in the last year, from 37% to 64%. This surge in scrutiny is a direct response to the “opacity” of the AI supply chain.

Without an AI-BOM, your AI applications are essentially “black boxes.” You may be using an open-source model that was fine-tuned on data with restrictive licensing, or worse, a model containing “backdoors” intentionally placed by an adversary. As we previously covered in our deep dive into Adversarial Machine Learning [3], an attacker’s ability to compromise a system often begins with a lack of visibility into these underlying “raw materials.”

Why Traditional SBOMs Fall Short

The traditional SBOM was designed for the world of deterministic code. If you have version 2.4.1 of a specific library, its behavior is predictable. AI is non-deterministic; its behavior changes based on the data it consumes and the environment in which it operates.

Treating AI as just another software dependency creates massive security blind spots. An AI-BOM extends the logic of the SBOM to include:

- Model Lineage: Which foundation model was used and what specific versions of fine-tuned weights are in production?

- Dataset Provenance: Where did the training data come from, and has it been verified for poisoning or bias?

- Orchestration Layers: What tools (e.g., LangChain, Semantic Kernel) are managing the flow of data between the user and the model?
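To make these three layers concrete, here is a minimal sketch of how a single AI-BOM entry might be modeled in Python. The class and field names (`AIBOMEntry`, `ModelLineage`, `DatasetRecord`, `screened`) and every sample value are hypothetical illustrations, not fields from any published standard.

```python
from dataclasses import dataclass, field, asdict
import json
from typing import List


@dataclass
class ModelLineage:
    base_model: str          # foundation model the system is built on
    base_version: str        # exact upstream release in use
    fine_tuned_weights: str  # identifier of the weights actually deployed


@dataclass
class DatasetRecord:
    name: str
    source: str              # origin of the data (vendor, URL, internal system)
    sha256: str              # content digest, recorded so later changes are detectable
    screened: bool           # has it been checked for poisoning and bias?


@dataclass
class AIBOMEntry:
    application: str
    lineage: ModelLineage
    datasets: List[DatasetRecord] = field(default_factory=list)
    orchestration: List[str] = field(default_factory=list)  # frameworks between user and model

    def unscreened_datasets(self) -> List[str]:
        """Names of datasets not yet checked for poisoning or bias."""
        return [d.name for d in self.datasets if not d.screened]


# Hypothetical example entry; every name and digest here is a placeholder.
entry = AIBOMEntry(
    application="loan-approval-agent",
    lineage=ModelLineage("example-foundation-model", "1.2", "loan-ft-2026-01"),
    datasets=[
        DatasetRecord("loan-history-2024", "internal data warehouse", "placeholder-digest-1", True),
        DatasetRecord("public-credit-corpus", "third-party vendor", "placeholder-digest-2", False),
    ],
    orchestration=["LangChain"],
)

print(json.dumps(asdict(entry), indent=2))
print(entry.unscreened_datasets())  # ['public-credit-corpus']
```

Keeping lineage, datasets, and orchestration in one record means a single lookup answers all three questions above for a given application.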

In May 2025, the U.S. Cybersecurity and Infrastructure Security Agency (CISA), alongside federal partners, released AI Data Security: Best Practices for Securing Data Used to Train and Operate AI Systems [4], guidance addressing data security risks across the AI system lifecycle. The guidance identifies the AI data supply chain as a primary attack surface, highlighting threats such as data poisoning, compromised third-party datasets, and weak controls over data provenance and integrity. While it stops short of formally classifying the data supply chain as a standalone critical infrastructure sector, the message is unambiguous: data used to train and operate AI systems must be governed with the same rigor traditionally applied to software code and software supply chains, particularly in environments supporting critical infrastructure and high-impact operations.
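One basic integrity control is to record a cryptographic digest of each dataset in the AI-BOM when it is ingested, then re-check those digests before every training run. The sketch below assumes datasets are plain files under a single directory and that the AI-BOM stores a simple `{relative_path: sha256_hex}` manifest; the function names are hypothetical. A matching digest proves the file has not changed since it was recorded, not that it was clean to begin with.

```python
import hashlib
import tempfile
from pathlib import Path
from typing import Dict, List


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large datasets never need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_manifest(manifest: Dict[str, str], root: Path) -> List[str]:
    """Compare each dataset file under `root` with the digest recorded in the AI-BOM.

    Returns a list of problems; an empty list means every file matches.
    """
    problems = []
    for rel_path, expected in sorted(manifest.items()):
        file_path = root / rel_path
        if not file_path.is_file():
            problems.append(f"missing: {rel_path}")
        elif sha256_of(file_path) != expected:
            problems.append(f"modified: {rel_path}")
    return problems


# Demonstration with a throwaway directory standing in for a dataset store.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "train.csv").write_text("id,label\n1,approve\n")
    manifest = {"train.csv": sha256_of(root / "train.csv")}  # digest recorded at ingestion
    before = verify_manifest(manifest, root)
    (root / "train.csv").write_text("id,label\n1,deny\n")     # simulate a silent label flip
    after = verify_manifest(manifest, root)

print(before)  # []
print(after)   # ['modified: train.csv']
```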

Bridging Ethics and Technical Governance

The AI-BOM is not merely a technical checklist; it is a cornerstone of AI Ethics and Security [5]. In 2026, “ethics” has moved from a boardroom buzzword to a regulatory requirement. Under frameworks like the EU AI Act, companies must be able to demonstrate “lineage” and “explainability” for their high-risk AI systems.

If a customer claims an AI-driven loan approval agent was biased, an AI-BOM allows your legal and IT teams to trace that decision back to the specific training dataset used three months ago. Without this record, your organization is exposed to significant regulatory and reputational risk.
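Tracing a disputed decision back this way only works if AI-BOM versions are kept with the date each one took effect. A minimal sketch, assuming a hypothetical history list sorted oldest first and a decision timestamp taken from your own decision logs; the model and dataset names are invented for illustration.

```python
from bisect import bisect_right
from datetime import datetime
from typing import Dict, List, Tuple

# Hypothetical AI-BOM history for one application: (effective_from, snapshot), oldest first.
HISTORY: List[Tuple[datetime, Dict]] = [
    (datetime(2025, 9, 1), {"model": "loan-ft-v1", "datasets": ["loan-history-2024"]}),
    (datetime(2025, 11, 15), {"model": "loan-ft-v2", "datasets": ["loan-history-2024", "applications-q3-2025"]}),
    (datetime(2026, 1, 20), {"model": "loan-ft-v3", "datasets": ["loan-history-2025"]}),
]


def bom_at(decision_time: datetime) -> Dict:
    """Return the AI-BOM snapshot that was in production when a decision was made."""
    effective_dates = [effective for effective, _ in HISTORY]
    # bisect_right finds the first snapshot that took effect *after* the decision;
    # the one just before it was the version in force.
    idx = bisect_right(effective_dates, decision_time) - 1
    if idx < 0:
        raise LookupError("decision predates the first recorded AI-BOM")
    return HISTORY[idx][1]


disputed = bom_at(datetime(2025, 12, 3, 14, 30))  # timestamp taken from the decision log
print(disputed["model"], disputed["datasets"])
# loan-ft-v2 ['loan-history-2024', 'applications-q3-2025']
```

Because snapshots are append-only, the answer for an old decision does not change when the model is later retrained.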

Resolving “Supply Chain” Security Debt

Many organizations have rushed to deploy AI over the last 18 months, often bypassing the rigorous vendor risk assessments they would apply to traditional software. This has led to an accumulation of “Supply Chain Security Debt.” As we noted in our strategic approach to Managing Security Debt [6], debt in the supply chain is particularly toxic because you do not have direct control over the “fix.”

When a vulnerability is discovered in a popular open-source model, organizations with a mature AI-BOM can identify every affected application in minutes. Those without an inventory must manually audit their entire stack, a process that often takes weeks, during which time the organization remains vulnerable to exploitation.
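The “minutes, not weeks” claim depends on the inventory recording lineage, because an application may run a model fine-tuned from the vulnerable one rather than the vulnerable model itself. A minimal sketch of that impact query, assuming a hypothetical parent map (model to the model it was fine-tuned from) and a hypothetical deployment map (application to production model):

```python
from typing import Dict, List, Optional

# Hypothetical lineage graph: each model maps to the model it was fine-tuned from
# (None marks a foundation model pulled from upstream).
PARENT: Dict[str, Optional[str]] = {
    "open-model-x@1.0": None,
    "support-ft-v2": "open-model-x@1.0",
    "support-ft-v3": "support-ft-v2",
    "vendor-model-y@3.2": None,
}

# Hypothetical deployment inventory: application -> model currently in production.
DEPLOYED: Dict[str, str] = {
    "support-chatbot": "support-ft-v3",
    "ticket-triage": "open-model-x@1.0",
    "demand-forecaster": "vendor-model-y@3.2",
}


def lineage(model: Optional[str]) -> List[str]:
    """The model itself followed by every ancestor it was derived from."""
    chain: List[str] = []
    while model is not None and model not in chain:  # membership check guards against cycles
        chain.append(model)
        model = PARENT.get(model)
    return chain


def affected_apps(vulnerable_model: str) -> List[str]:
    """Applications whose production model is, or was fine-tuned from, the vulnerable model."""
    return sorted(app for app, model in DEPLOYED.items() if vulnerable_model in lineage(model))


print(affected_apps("open-model-x@1.0"))  # ['support-chatbot', 'ticket-triage']
```

Note that `support-chatbot` is flagged even though it never references the vulnerable model directly; a flat list of deployed models would miss it.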

Practical Implementation: Building Your AI-BOM Program

For IT leaders, the transition to an AI-BOM-ready organization should follow the NIST AI Risk Management Framework [7], specifically its “Map” and “Measure” functions.

1. Inventory Your “Shadow AI”

The first step is discovery. Most organizations are using more AI than they realize. Whether it is a marketing team using a browser-based image generator or a developer using an AI coding assistant, these tools must be brought into the light and cataloged in your centralized inventory.
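Network egress logs are one practical discovery source. The sketch below assumes a hypothetical whitespace-separated proxy log format (`<timestamp> <user> <department> <host>`); the host list is illustrative and far from exhaustive, and the `SANCTIONED` set stands in for whatever your inventory already records.

```python
from collections import Counter
from typing import Iterable

# Illustrative, non-exhaustive list of AI service hostnames; a real program needs a maintained feed.
KNOWN_AI_HOSTS = {"api.openai.com", "chatgpt.com", "api.anthropic.com", "claude.ai", "gemini.google.com"}
# Hypothetical: services already approved and recorded in the AI-BOM inventory.
SANCTIONED = {"api.openai.com"}


def find_shadow_ai(log_lines: Iterable[str]) -> Counter:
    """Count unsanctioned AI-service requests per (department, host).

    Assumes a hypothetical, whitespace-separated proxy log format:
    '<timestamp> <user> <department> <host>'.
    """
    hits: Counter = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) != 4:
            continue  # skip malformed lines rather than guess at their meaning
        _, _, department, host = parts
        host = host.lower()
        if host in KNOWN_AI_HOSTS and host not in SANCTIONED:
            hits[(department, host)] += 1
    return hits


sample_log = [
    "2026-01-10T09:00 alice marketing claude.ai",
    "2026-01-10T09:05 bob engineering api.openai.com",
    "2026-01-10T09:07 alice marketing claude.ai",
    "2026-01-10T09:20 carol engineering api.anthropic.com",
]
for (department, host), count in find_shadow_ai(sample_log).most_common():
    print(f"{department:<12} {host:<20} {count}")
```

Grouping by department points the follow-up conversation at the team using the tool; exact hostname matching is deliberately simple and will miss subdomains and browser extensions.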

2. Adopt Standardized Formats

Do not reinvent the wheel. Industry standards such as SPDX 3.0 (through its AI and Dataset profiles) and CycloneDX (through the machine-learning BOM support added in version 1.5) have evolved to support AI-specific fields. By using these machine-readable formats, you ensure that your AI-BOM can be shared with partners and audited by automated tools.
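As an illustration of what “machine-readable” means in practice, here is a minimal sketch that emits a CycloneDX-style JSON document linking a model to its training datasets. The field names follow our reading of the CycloneDX 1.5 JSON format (which introduced the `machine-learning-model` and `data` component types); using `dependencies` to express “trained on” is our modeling choice, and the function name and sample values are hypothetical. Validate real output against the official CycloneDX schema before sharing it, and note that richer fields such as model cards are omitted.

```python
import json
import uuid
from typing import Dict, List, Tuple


def minimal_ml_bom(model_name: str, model_version: str, datasets: List[Tuple[str, str]]) -> Dict:
    """Build a minimal CycloneDX-style JSON document linking a model to its training datasets."""
    model_ref = f"model:{model_name}@{model_version}"
    components = [{
        "type": "machine-learning-model",
        "bom-ref": model_ref,
        "name": model_name,
        "version": model_version,
    }]
    data_refs = []
    for name, version in datasets:
        ref = f"data:{name}@{version}"
        data_refs.append(ref)
        components.append({"type": "data", "bom-ref": ref, "name": name, "version": version})
    return {
        "bomFormat": "CycloneDX",
        "specVersion": "1.5",
        "serialNumber": f"urn:uuid:{uuid.uuid4()}",
        "version": 1,
        "components": components,
        # Record that the model depends on (was trained on) each dataset.
        "dependencies": [{"ref": model_ref, "dependsOn": data_refs}],
    }


bom = minimal_ml_bom("loan-ft", "2026.01", [("loan-history-2024", "1"), ("applications-q3-2025", "2")])
print(json.dumps(bom, indent=2))
```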

3. Treat the AI-BOM as a “Living” Document

In traditional software, a Bill of Materials is generated at the time of release. For AI, it must be a lifecycle artifact. If you fine-tune a model on new customer data, your AI-BOM must reflect that update immediately. This “continuous inventory” approach allows you to detect “model drift” or “data rot” before it impacts your business operations.
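One building block of a continuous inventory is comparing the AI-BOM snapshot regenerated after each pipeline run with the last recorded one, so that no change reaches production unreviewed. A minimal sketch, assuming each snapshot is a hypothetical dictionary holding a model identifier and a map of dataset names to content digests. It catches changes to recorded components; detecting statistical model drift still requires monitoring the model’s inputs and outputs.

```python
from typing import Dict, List, Union


def diff_bom(old: Dict, new: Dict) -> Dict[str, Union[bool, List[str]]]:
    """Compare two AI-BOM snapshots: a model id plus a mapping of dataset name -> content digest."""
    old_ds, new_ds = old["datasets"], new["datasets"]
    return {
        "model_changed": old["model"] != new["model"],
        "added": sorted(new_ds.keys() - old_ds.keys()),
        "removed": sorted(old_ds.keys() - new_ds.keys()),
        "modified": sorted(k for k in old_ds.keys() & new_ds.keys() if old_ds[k] != new_ds[k]),
    }


# Hypothetical snapshots before and after a fine-tuning run on new customer data.
previous = {"model": "support-ft-v2", "datasets": {"faq-2025": "digest-a", "tickets-2025": "digest-b"}}
current = {"model": "support-ft-v3",
           "datasets": {"faq-2025": "digest-a", "tickets-2025": "digest-c", "customer-chats-2026q1": "digest-d"}}

changes = diff_bom(previous, current)
needs_review = changes["model_changed"] or any(changes[k] for k in ("added", "removed", "modified"))
print(changes)
print("AI-BOM update required:", needs_review)
```

Run as a pipeline step, a non-empty diff can block promotion until the new snapshot is recorded and approved.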

Conclusion: Transparency as a Competitive Advantage

In the 2026 economy, trust is the primary currency. Organizations that can prove their AI is built on clean, ethical, and secure foundations will win the loyalty of both customers and regulators. The AI-BOM is more than just a compliance requirement; it is a roadmap for building resilient, AI-native systems that can withstand the adversarial threats of tomorrow.

By integrating the AI-BOM into your Security Debt management and your Adversarial Defense strategies, you are moving your organization from a posture of “blind trust” to one of “verified resilience.”

In the evolving landscape of 2026, Emutare bridges the critical gap between AI ambition and technical readiness. Our AI Adoption and Technology Consultation empowers your organization to deploy autonomous agents safely by integrating robust Cybersecurity Governance frameworks. We specialize in Cybersecurity Risk Assessments and IT Auditing to help you map complex AI supply chains and maintain the living documentation required for AI-BOM compliance. From identifying shadow AI to securing foundation models, Emutare ensures your AI journey is resilient, ethical, and verified.

References

1. Cisco. (2025). Cisco AI Readiness Index 2025. https://www.cisco.com/c/dam/m/en_us/solutions/ai/readiness-index/2025-m10/documents/cisco-ai-readiness-index-2025-realizing-the-value-of-ai.pdf

2. World Economic Forum. (2026). Global Cybersecurity Outlook 2026. https://www.weforum.org/publications/global-cybersecurity-outlook-2026/in-full/3-the-trends-reshaping-cybersecurity/

3. Emutare. (2025). Adversarial Machine Learning: Understanding the Threats. https://insights.emutare.com/adversarial-machine-learning-understanding-the-threats/

4. Cybersecurity and Infrastructure Security Agency. (2025). AI data security: Best practices for securing data used to train and operate AI systems. U.S. Department of Homeland Security. https://www.cisa.gov/news-events/alerts/2025/05/22/new-best-practices-guide-securing-ai-data-released

5. Emutare. (2025). AI Ethics and Security: Balancing Innovation and Protection. https://insights.emutare.com/ai-ethics-and-security-balancing-innovation-and-protection/

6. Emutare. (2025). Managing Security Debt in Software Development: A Strategic Approach to Long-term Security Excellence. https://insights.emutare.com/managing-security-debt-in-software-development-a-strategic-approach-to-long-term-security-excellence/

7. NIST. (2023). AI Risk Management Framework (AI RMF) 1.0. https://www.nist.gov/itl/ai-risk-management-framework
