In the landscape of 2026, the most sophisticated cyberattack is no longer a virus designed to crash your server or an encrypted payload aimed at your database. Instead, the most dangerous threat is an attack on the most vulnerable processor in your organization: the human mind. This is the era of “Cognitive Hacking,” a term that describes the use of digital tools to manipulate human perception, sentiment, and decision-making at scale.

While traditional social engineering relies on a simple “phish” or a deceptive phone call, cognitive hacking involves a deep, data-driven understanding of psychology and behavioral science. As we previously explored in our discussion on AI Ethics and Security [1], the ethical guardrails we build for AI must also account for how these technologies can be weaponized to exploit human cognitive biases. In 2026, the line between a “personalized marketing campaign” and a “cognitive hack” has become dangerously thin.

The Anatomy of a Cognitive Hack

Unlike a traditional breach, a cognitive hack does not necessarily require the attacker to steal credentials or bypass a firewall. Instead, the adversary uses AI to influence a target’s worldview. According to the World Economic Forum’s Global Cybersecurity Outlook 2026 [2], “misinformation and disinformation” fueled by AI is now ranked as the number one global risk by business leaders, surpassing traditional malware for the first time in history.

The process often begins with “micro-targeting.” Using leaked data or publicly available social media footprints, an attacker builds a psychological profile of an executive or an IT administrator. They then use generative AI to create a series of subtle interactions (emails, synthetic voice notes, or even deepfake video snippets) that reinforce a specific narrative. Over time, these interactions “nudge” the target into making a decision they would otherwise avoid, such as approving an anomalous vendor payment or relaxing security protocols during a critical system update.

From Digital Deception to Adversarial Influence

The relationship between cognitive hacking and the technical infrastructure of an organization is intimate. As we have covered in our analysis of Adversarial Machine Learning [3], adversaries are increasingly focused on the integrity of information. In a cognitive hack, the “information” being corrupted is the human perception of reality.

Consider a scenario where an attacker “poisons” the internal sentiment analysis tools used by an HR department or a leadership team. By flooding internal communication channels with bot-generated feedback that mimics the tone and concerns of real employees, an adversary can influence corporate policy or create internal friction. This is not just a prank; it is a strategic disruption intended to weaken the organization’s operational coherence, making it more susceptible to traditional cyberattacks.
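One way to make this scenario concrete is a simple screening pass over incoming feedback. The sketch below is illustrative only. It assumes that bot-generated submissions tend to be near-duplicates of one another, and it uses Python's standard `difflib` module to measure similarity. The function names, the 0.8 threshold, and the minimum group size of 3 are hypothetical choices, not tuned values.

```python
from difflib import SequenceMatcher


def similarity(a: str, b: str) -> float:
    """Return a 0..1 similarity ratio between two messages, ignoring case."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def flag_near_duplicates(
    messages: list[str],
    threshold: float = 0.8,  # assumed cut-off, not a tuned value
    min_group: int = 3,      # assumed minimum size of a suspicious cluster
) -> list[int]:
    """Return indices of messages that belong to a group of near-duplicates.

    A message is flagged when it, together with the other messages that
    resemble it, forms a group of at least `min_group` items.
    """
    flagged = []
    for i, msg in enumerate(messages):
        similar = sum(
            1
            for j, other in enumerate(messages)
            if j != i and similarity(msg, other) >= threshold
        )
        if similar + 1 >= min_group:
            flagged.append(i)
    return flagged
```

An adversary using generative AI can paraphrase heavily, so a check like this would be one signal among several (account age, posting bursts, source network) rather than a detector on its own. The pairwise comparison is also quadratic in the number of messages, which is acceptable for a sketch but not for a large feedback stream.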

The Cost of Cognitive Security Debt

Many organizations are currently suffering from what we might call “Cognitive Security Debt.” This occurs when a company deploys advanced AI communication tools or internal social networks without first training its workforce on the nuances of synthetic media and influence operations. As previously discussed in our guide on Managing Security Debt [4], any shortcut in the “human” side of security architecture eventually becomes a liability.

Cognitive debt manifests when employees are over-reliant on digital signals for trust. In 2026, a “verified” badge or a familiar-sounding voice over a Slack huddle is no longer a guarantee of identity. Organizations that have failed to implement protocol-based authentication (moving from “I recognize your voice” to “I verify your cryptographic token”) are essentially operating on an unsecured psychological network.

According to the Thomson Reuters Institute (2025) [5], cognitive debt accumulates when employees outsource critical thinking and memory to AI, leading to “agency decay.” Over-reliance on AI-driven social signals creates a workforce that has lost the habit of skepticism. To maintain security, organizations must pivot from passive “social trust” to active “human-in-the-loop” verification, ensuring talent remains the final arbiter of truth rather than a spectator to AI-mediated interactions.
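To illustrate what moving from “I recognize your voice” to “I verify your cryptographic token” can look like, here is a minimal challenge-response sketch using Python's standard `hmac`, `hashlib`, and `secrets` modules. It is a simplified stand-in, not a recommended design: it assumes both parties already share a secret key that was provisioned securely, and all function names and the request format are hypothetical. Production systems more commonly rely on hardware-backed, public-key schemes.

```python
import hashlib
import hmac
import secrets


def new_challenge() -> str:
    """Create a random, single-use challenge for the verifier to send."""
    return secrets.token_hex(16)


def sign_challenge(shared_key: bytes, challenge: str, request_summary: str) -> str:
    """Compute an HMAC-SHA256 tag over the challenge and the request details.

    Binding the tag to the request text means a valid response for one
    request cannot be reused to approve a different one.
    """
    message = f"{challenge}|{request_summary}".encode("utf-8")
    return hmac.new(shared_key, message, hashlib.sha256).hexdigest()


def verify_response(
    shared_key: bytes, challenge: str, request_summary: str, response: str
) -> bool:
    """Check the response using a constant-time comparison."""
    expected = sign_challenge(shared_key, challenge, request_summary)
    return hmac.compare_digest(expected, response)
```

In this sketch, the person receiving an unusual request issues a fresh challenge, the requester's device returns the signed response, and the request goes ahead only if `verify_response` returns `True`. A convincing voice or face plays no part in the decision.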

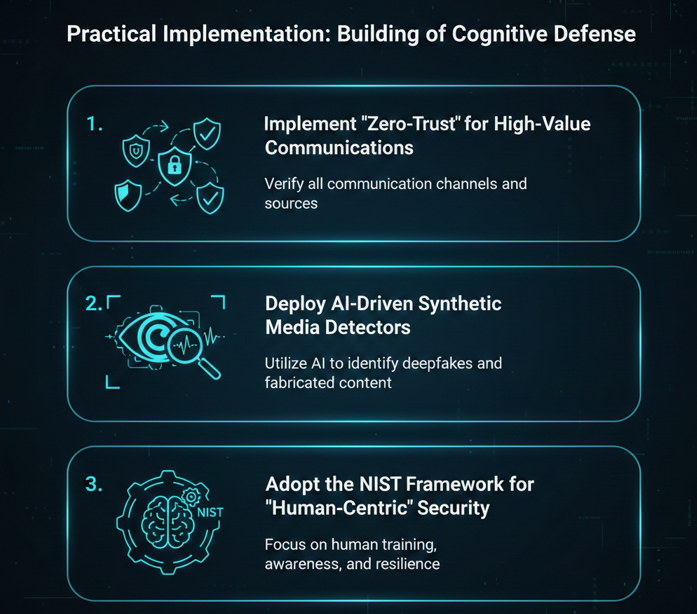

Practical Implementation: Building a Cognitive Defense

For business leaders and IT professionals, defending against cognitive hacking requires a shift from technical barriers to “cognitive resilience.” This involves a multi-layered strategy that treats human psychology as a critical part of the attack surface.

1. Implement “Zero-Trust” for High-Value Communications

Just as we apply zero-trust principles to network architecture, we must apply them to communication. Any request that involves a financial transfer, a change in security policy, or access to sensitive data should require multi-factor authentication (MFA) that is independent of the communication channel.

- Guidance: If an executive receives a “video call” from the CEO requesting an emergency wire transfer, the protocol should mandate a second, out-of-band verification via a physical security key or a pre-arranged verbal code.
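The out-of-band rule above can be expressed as a small policy gate. The following is a sketch under stated assumptions: the action names, channel labels, and the `Request` structure are all hypothetical, and a real deployment would enforce this inside a payment or change-management workflow rather than in a standalone function.

```python
from dataclasses import dataclass, field

# Hypothetical set of actions that always require independent verification.
HIGH_RISK_ACTIONS = {
    "wire_transfer",
    "security_policy_change",
    "sensitive_data_access",
}


@dataclass
class Request:
    action: str
    origin_channel: str  # channel the request arrived on, e.g. "video_call"
    verified_channels: set[str] = field(default_factory=set)


def may_proceed(request: Request) -> bool:
    """Allow a high-risk request only if it was verified out of band.

    Verification on the same channel the request arrived on does not count,
    because a compromised or spoofed channel would confirm itself.
    """
    if request.action not in HIGH_RISK_ACTIONS:
        return True
    independent = request.verified_channels - {request.origin_channel}
    return len(independent) > 0
```

For example, a `wire_transfer` request that arrives by `video_call` and is confirmed only on that same call would be rejected, while the same request confirmed with a `security_key` would pass.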

2. Deploy AI-Driven Synthetic Media Detectors

As deepfakes become more realistic, the human eye and ear are no longer sufficient tools for verification. Organizations should integrate synthetic media detection tools into their email gateways and communication platforms.

- Guidance: Look for solutions that analyze “biological signatures” in video and audio, such as blood flow patterns in faces or unnatural pauses in speech, which are difficult for current generative models to replicate perfectly.

3. Adopt the NIST Framework for “Human-Centric” Security

The National Institute of Standards and Technology (NIST) has long advocated for a holistic approach to security. In its AI Risk Management Framework [6], NIST emphasizes the importance of “interpretability” and “transparency.” For cognitive security, this means ensuring that employees understand why an AI tool is making a suggestion, allowing them to spot “hallucinations” or manipulative “nudges.”

The Psychology of Resilience

Ultimately, the best defense against cognitive hacking is a culture of healthy skepticism. This is not about creating a workplace of paranoia, but rather one of “informed verification.”

A resilient organization uses the OODA loop (Observe, Orient, Decide, Act) at both the machine and human levels. When an employee observes a digital interaction that feels slightly “off,” they should have a clear, non-punitive path to report it. Security teams should treat these reports not as “false positives” but as valuable telemetry on the organization’s cognitive health.
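Treating reports as telemetry implies recording them in a consistent shape and looking at them in aggregate. The snippet below is a minimal sketch of that idea. The `SuspicionReport` fields and channel labels are assumptions made for illustration, not a schema from any particular product.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class SuspicionReport:
    reporter: str
    channel: str      # hypothetical labels such as "email", "voice", "video"
    description: str


def channel_trends(reports: list[SuspicionReport]) -> list[tuple[str, int]]:
    """Count reports per channel, most-reported channel first."""
    return Counter(r.channel for r in reports).most_common()
```

A rise in reports on one channel, even if each individual report turns out to be benign, can indicate where an influence campaign is probing and where awareness training should focus next.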

Conclusion: Safeguarding the Human Element

As we move further into the AI-native era, the battle for security will be fought as much in the minds of our employees as in the lines of our code. Cognitive hacking represents a sophisticated, persistent threat that bypasses technical firewalls to exploit human intuition.

By addressing our Security Debt, understanding the evolution of Adversarial Threats, and maintaining an Ethical Framework for our digital tools, we can build a resilient enterprise that is as psychologically sound as it is technically secure.

In 2026, the most secure firewall is an informed, skeptical, and empowered human being.

In 2026, the human mind is your most critical attack surface. To defend against cognitive hacking and “agency decay,” Emutare provides specialized Cybersecurity Awareness Training to sharpen employee skepticism. Our AI Adoption Consultation and AI-Driven Process Automation help your team integrate technology while avoiding cognitive debt. We also offer AI-native security controls and Infrastructure Architecture Development to transition your organization from passive social trust to active, protocol-based verification. Partner with Emutare to build a psychologically resilient enterprise that is secure by design.

References

1. Emutare. (2025). AI Ethics and Security: Balancing Innovation and Protection. https://insights.emutare.com/ai-ethics-and-security-balancing-innovation-and-protection/

2. World Economic Forum. (2026). Global Cybersecurity Outlook 2026. https://www.weforum.org/publications/global-cybersecurity-outlook-2026/in-full/3-the-trends-reshaping-cybersecurity/

3. Emutare. (2025). Adversarial Machine Learning: Understanding the Threats. https://insights.emutare.com/adversarial-machine-learning-understanding-the-threats/

4. Emutare. (2025). Managing Security Debt in Software Development: A Strategic Approach to Long-term Security Excellence. https://insights.emutare.com/managing-security-debt-in-software-development-a-strategic-approach-to-long-term-security-excellence/

5. Thomson Reuters Institute. (2025). Avoiding cognitive debt & agency decay among talent. Thomson Reuters. https://www.thomsonreuters.com/en-us/posts/sustainability/avoiding-cognitive-debt-agency-decay/

6. National Institute of Standards and Technology (NIST). (2025). AI Risk Management Framework (AI RMF). https://www.nist.gov/itl/ai-risk-management-framework
