The New Reality of the “Moving” Enterprise

As we navigate the first quarter of 2026, the definition of a “workforce” has fundamentally changed. We have transitioned from the era of static automation, where robots performed repetitive, pre-programmed tasks in caged environments, to the era of the AI-native mobile agent. Today, autonomous mobile robots (AMRs) and automated guided vehicles (AGVs) operate alongside humans in warehouses, hospitals, and shipping hubs. These systems do not just “move”; they perceive, decide, and act.

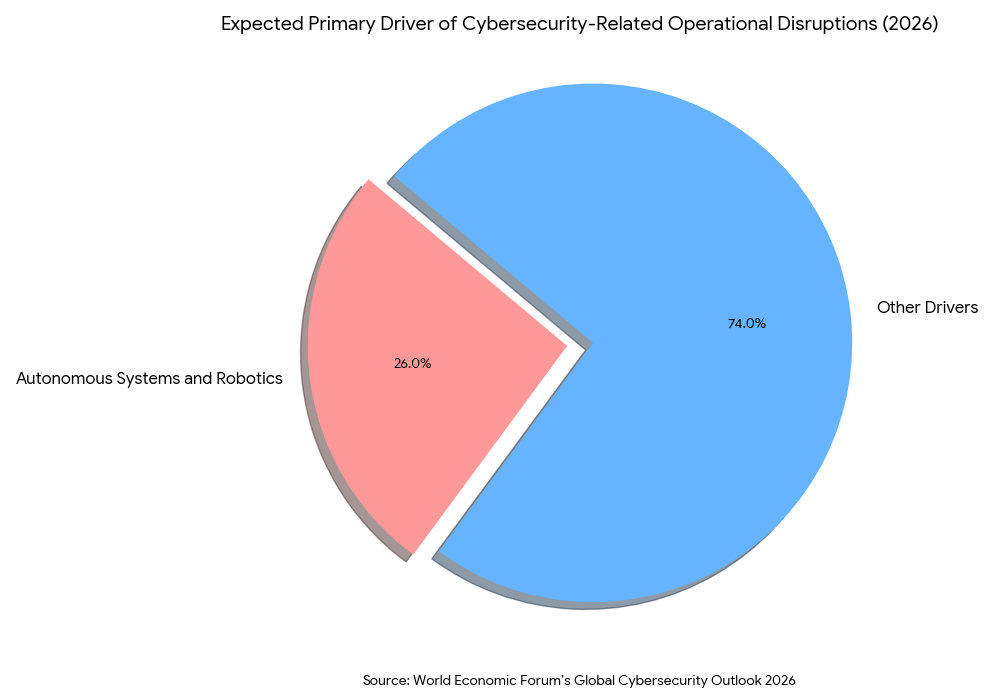

This shift represents a significant leap in productivity, but it also introduces a “kinetic risk” that the cybersecurity industry is only beginning to quantify. When an AI system exists solely in the digital realm, a failure results in data loss or service downtime. When an AI system is integrated into a 500-kilogram robotic forklift, a failure can result in structural damage, inventory destruction, or physical injury. According to the World Economic Forum’s Global Cybersecurity Outlook 2026 [1], 26% of global business leaders now expect autonomous systems and robotics to be the primary driver of cybersecurity-related operational disruptions this year.

The Duality of Autonomous Innovation

For business leaders, the goal is clear: maximize the efficiency of autonomous logistics while ensuring the safety of the operational environment. However, as we previously explored in our deep dive into AI Ethics and Security [2], the speed of innovation often outpaces our ability to build protective guardrails. In the world of physical AI, “ethics” is not just a philosophical debate; it is a safety protocol. If a robot is forced to choose between protecting a piece of expensive equipment and ensuring the safety of a human bystander, the underlying ethical programming becomes a critical security control.

The Evolution of the Threat Landscape: Beyond the Screen

In the past, industrial security was synonymous with “air-gapping” (isolating systems from the internet). In 2026, the air gap is dead. Modern logistics relies on real-time cloud analytics and multi-agent coordination, meaning every robot is an IoT endpoint. This connectivity opens the door to a new class of threats that go beyond traditional malware.

Adversarial Manipulation of Physical Perception

One of the most concerning developments in the current landscape is the rise of perception-based attacks. As we have documented in our research on Adversarial Machine Learning [3], attackers are no longer just trying to steal passwords; they are trying to trick the “senses” of the AI.

In a physical context, this is known as a “transduction attack.” By using infrared lasers, projectors, or even subtle acoustic signals, an adversary can “blind” a robot’s LiDAR or spoof its camera inputs. For example, a carefully placed sticker on a warehouse floor, inconspicuous to a human passerby but interpreted as a “stop” command by the robot’s vision model, can cause a logistical bottleneck that costs a company millions in delayed shipments. These attacks are particularly insidious because they leave no “footprint” in the traditional sense; the robot is simply performing exactly as its corrupted perception dictates.

The Integrity Crisis in Data Training

The threat is not limited to the “real-time” environment. The long-term security of physical AI depends on the integrity of the data used to train its navigation and task-management models, and of the trained models themselves. In its study Silent Sabotage: Weaponizing AI Models in Exposed Containers [4], Trend Micro details how attackers exploit exposed or misconfigured container registries (for example, a private Docker registry left reachable without authentication) to tamper with AI models before they are even deployed.

In these scenarios, a robot might function perfectly 99% of the time, only to fail or perform a “wrong” action when it encounters a specific, attacker-defined trigger. Imagine a fleet of delivery drones that operate flawlessly until they receive a specific signal, at which point they all enter a “safe landing” mode in an unsecured location. This is the new reality of industrial sabotage in 2026.
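One basic defence against tampering between training and deployment is to pin a cryptographic digest of the model artifact and re-check it before the model is loaded onto a robot. The sketch below is a minimal illustration using only the Python standard library; the file name `nav_model.bin` and the helper names are hypothetical, and a production pipeline would more likely rely on signed artifacts than on a bare hash.

```python
import hashlib
import hmac
import tempfile
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def model_is_untampered(path: Path, pinned_digest: str) -> bool:
    """Compare the artifact's current digest with the one recorded at training time."""
    return hmac.compare_digest(sha256_of_file(path), pinned_digest.lower())


def demo() -> tuple[bool, bool]:
    """Pin a stand-in model file, tamper with it, and check it before and after."""
    with tempfile.TemporaryDirectory() as workdir:
        model_path = Path(workdir) / "nav_model.bin"  # hypothetical artifact name
        model_path.write_bytes(b"original weights")
        pinned = sha256_of_file(model_path)  # recorded when the model is released
        before = model_is_untampered(model_path, pinned)
        model_path.write_bytes(b"original weights + backdoor")  # simulated tampering
        after = model_is_untampered(model_path, pinned)
    return before, after
```

A digest check only detects changes made after the digest was recorded; it does not help if the training data itself was poisoned, which is why the pinned value should be stored separately from the registry that holds the artifact.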

The Weight of Robotic Security Debt

As organizations rushed to automate during the labor shortages of the early 2020s, many prioritized “uptime” over “security architecture.” This has led to a massive accumulation of what we call “Robotic Security Debt.” As we noted in our strategic guide on Managing Security Debt [5], this debt is particularly dangerous in the OT (Operational Technology) space because industrial hardware has a much longer lifecycle than a typical laptop or server.

The Legacy Problem in a Modern World

A robot purchased in 2022 might still be in operation in 2028, but its underlying operating system, often a lightweight version of Linux or a proprietary Real-Time Operating System (RTOS), may not have seen a security patch in years. This creates a “vulnerability window” that attackers are eager to exploit.

Furthermore, the “Security Debt” in robotics is often compounded by insecure communication. Many industrial robots still run ROS 1 (the first generation of the Robot Operating System), whose transport layer provides no built-in authentication or encryption because it was designed for research environments, not hostile networks. Migrating to ROS 2, which can enable DDS-Security for authenticated and encrypted traffic, or to another secure framework is a necessary but expensive step that many firms are delaying at their own peril.
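To make concrete what an unauthenticated transport is missing, the following sketch attaches an HMAC tag to each command so that a receiver can reject messages that were forged or altered in transit. It is a generic illustration built on the Python standard library, not the ROS API; the function names and the message layout are assumptions, and it deliberately omits encryption and replay protection.

```python
import hashlib
import hmac
import json


def sign_command(command: dict, key: bytes) -> dict:
    """Wrap a command in an envelope carrying an HMAC-SHA256 tag."""
    payload = json.dumps(command, sort_keys=True).encode("utf-8")
    tag = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"payload": command, "tag": tag}


def verify_command(envelope: dict, key: bytes) -> bool:
    """Recompute the tag over the received payload and compare in constant time."""
    payload = json.dumps(envelope["payload"], sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, envelope["tag"])


# Hypothetical usage: a fleet manager signs a velocity command for one robot.
shared_key = b"demo-key-do-not-use-in-production"
envelope = sign_command({"robot": "amr-07", "linear_m_s": 0.5}, shared_key)
```

In practice, a fleet on ROS 2 would normally rely on the middleware's security features rather than hand-rolled message signing; the point of the sketch is only to show the check that a plaintext, unauthenticated channel never performs.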

Bridging the Gap: The IT/OT Convergence

In 2026, the most successful organizations are those that have dismantled the silos between Information Technology (IT) and Operational Technology (OT). Historically, IT was concerned with data, while OT was concerned with machines. Today, they are one and the same.

Leveraging the NIST Framework for Physical Safety

To manage this convergence, the National Institute of Standards and Technology (NIST) updated its guidance in SP 800-82 Revision 3 [6]. This document is now the “bible” for securing industrial control systems. It emphasizes a shift from “Confidentiality” to “Safety and Availability.”

For an IT professional, this means rethinking standard security measures. In a traditional office environment, if a computer is suspected of being compromised, the standard response is to isolate it from the network. In an autonomous logistics environment, isolating a robot while it is moving at high speed could cause a catastrophic collision. The “security response” must be as intelligent as the system it is protecting.
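A safety-aware response can be sketched as an ordered plan in which the robot is brought to a controlled stop before its network access is cut. The action names, the `RobotState` fields, and the standstill threshold below are illustrative assumptions, not part of any standard or vendor API.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    NOTIFY_OPERATOR = "notify_operator"
    CONTROLLED_STOP = "controlled_stop"
    ISOLATE_NETWORK = "isolate_network"


@dataclass(frozen=True)
class RobotState:
    robot_id: str
    speed_m_s: float


def containment_plan(state: RobotState, standstill_m_s: float = 0.05) -> list[Action]:
    """Order containment steps so a moving robot is stopped before it is isolated."""
    plan = [Action.NOTIFY_OPERATOR]
    if state.speed_m_s > standstill_m_s:
        # Cutting the network first could strand a moving robot without
        # traffic coordination, so a controlled stop comes before isolation.
        plan.append(Action.CONTROLLED_STOP)
    plan.append(Action.ISOLATE_NETWORK)
    return plan
```

For a robot reported at 1.8 m/s the plan includes the controlled stop, while a parked robot goes straight from notification to isolation.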

Practical Implementation: A Roadmap for the AI-Native Logistics Hub

For business leaders ready to secure their physical AI investments, we recommend a three-pillar approach to implementation.

Pillar 1: Hardware-Rooted Trust

Security must start at the silicon level. Every autonomous agent should be equipped with a hardware root of trust, such as a Trusted Platform Module (TPM) or a Secure Element, that anchors a secure boot chain so the robot only runs verified, signed firmware.

- Implementation Tip: Audit your current fleet for “Secure Boot” capabilities. If a machine cannot verify its own code at startup, it should be segmented into a highly restricted “legacy” network zone.
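The audit described in the tip above can be sketched as a simple classification of an inventory into network zones. The `Robot` record and the zone names are hypothetical; a real audit would pull Secure Boot status from the vendor's management tooling rather than from a hand-written list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Robot:
    robot_id: str
    has_secure_boot: bool


def assign_zone(robot: Robot) -> str:
    """Machines that cannot verify their own code at startup go to the restricted zone."""
    return "trusted" if robot.has_secure_boot else "legacy-restricted"


def audit_fleet(fleet: list[Robot]) -> dict[str, list[str]]:
    """Group robot IDs by the network zone they should be placed in."""
    zones: dict[str, list[str]] = {"trusted": [], "legacy-restricted": []}
    for robot in fleet:
        zones[assign_zone(robot)].append(robot.robot_id)
    return zones


# Hypothetical inventory of three machines.
fleet = [
    Robot("amr-01", has_secure_boot=True),
    Robot("agv-14", has_secure_boot=False),
    Robot("amr-02", has_secure_boot=True),
]
report = audit_fleet(fleet)
```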

Pillar 2: Semantic and Behavioral Guardrails

We must move beyond simple “if-then” logic. Modern robotics requires semantic guardrails: AI-driven “sanity checks” that monitor the robot’s intent.

- Example: If a logistics robot suddenly requests access to the company’s financial server or attempts to move outside its designated geofence, the system should automatically trigger a “soft-stop” and require human re-authentication.
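The example above can be reduced to a small policy check that runs before a robot acts on a planned move or opens a network connection. This is a deliberately simple, rule-based sketch: the rectangular geofence, the host allowlist, and the names `evaluate_intent` and `ALLOWED_HOSTS` are assumptions for illustration, and a real guardrail would combine such rules with learned behavioural baselines.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Geofence:
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        """Return True if the point lies inside the rectangular zone."""
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


# Hypothetical hosts a logistics robot has a legitimate reason to contact.
ALLOWED_HOSTS = frozenset({"fleet-manager.internal", "map-server.internal"})


def evaluate_intent(
    geofence: Geofence,
    target_xy: tuple[float, float],
    requested_host: Optional[str] = None,
) -> tuple[str, list[str]]:
    """Return ("proceed", []) or ("soft_stop", reasons) for a planned action."""
    reasons: list[str] = []
    if not geofence.contains(*target_xy):
        reasons.append("target outside geofence")
    if requested_host is not None and requested_host not in ALLOWED_HOSTS:
        reasons.append(f"unexpected network destination: {requested_host}")
    return ("soft_stop", reasons) if reasons else ("proceed", reasons)
```

A `soft_stop` decision would then hand control to the human re-authentication step rather than silently dropping the request, so operators see why the robot paused.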

Pillar 3: Continuous Exposure Management (CEM)

In the world of physical AI, a “once-a-year” penetration test is not enough on its own. Organizations need a continuous feedback loop.

- Implementation Tip: Use digital twins to simulate attacks. By creating a virtual replica of your warehouse, you can run thousands of “Adversarial Machine Learning” simulations to see how your robots react to sensor spoofing or data poisoning without risking actual hardware.
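As a toy stand-in for a digital twin, the sketch below simulates a robot driving down a one-dimensional aisle toward an obstacle while an attacker inflates its range readings. Every parameter (speeds, distances, the spoofing offset, the plausibility tolerance) is a made-up value chosen for illustration; a real digital twin would model the full warehouse and sensor stack.

```python
def simulate(
    spoof_offset_m: float,
    plausibility_check: bool,
    spoof_start_step: int = 50,
    obstacle_m: float = 10.0,
    speed_m_s: float = 1.0,
    dt_s: float = 0.1,
    stop_distance_m: float = 1.0,
    tolerance_m: float = 0.5,
    max_steps: int = 200,
) -> bool:
    """Return True if the simulated robot collides with the obstacle."""
    position = 0.0
    expected = obstacle_m  # distance predicted by dead reckoning
    for step in range(max_steps):
        true_distance = obstacle_m - position
        if true_distance <= 0:
            return True  # the robot reached the obstacle without stopping
        # After the attack starts, the sensor reports the obstacle as farther away.
        spoofed = step >= spoof_start_step
        reading = true_distance + (spoof_offset_m if spoofed else 0.0)
        if plausibility_check and abs(reading - expected) > tolerance_m:
            # A jump this large is physically implausible: fall back to the prediction.
            reading = expected
        if reading <= stop_distance_m:
            return False  # the controller stopped in time
        position += speed_m_s * dt_s
        expected = reading - speed_m_s * dt_s
    return False
```

Running `simulate(5.0, plausibility_check=False)` and `simulate(5.0, plausibility_check=True)` side by side shows the kind of comparison a simulation campaign produces: the same attack, with and without a candidate countermeasure, and no hardware at risk.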

The Role of Human-Centric Resilience

Despite the focus on automation, the human element remains the most critical component of security. As we previously discussed in our piece on AI Ethics and Security, trust is built on transparency. Workers on the warehouse floor need to understand how the robots “see” the world so they can identify when a machine is behaving erratically.

Training programs should shift from “how to operate the robot” to “how to identify an AI anomaly.” If a robot is stuttering in its movement or ignoring standard pathing, employees should be empowered to hit the physical kill switch without fear of being penalized for “slowing down production.”

Conclusion: Securing the Future of Motion

The integration of AI into the physical world is one of the greatest technological achievements of our decade. It has the potential to eliminate dangerous jobs, streamline global trade, and reduce waste. However, these benefits are only sustainable if we build on a foundation of security and trust.

By addressing our Security Debt today, staying vigilant against Adversarial Threats, and following the rigorous standards set by bodies like NIST, we can ensure that the “moving” enterprise remains a safe and prosperous one.

The kinetic frontier is here. It is no longer enough to secure your data; you must secure your motion.

As autonomous logistics redefine the moving enterprise, Emutare provides the specialized expertise needed to secure your physical AI. We bridge the gap between IT and OT through comprehensive vulnerability management and infrastructure architecture development. Our team assists with AI adoption and technology consultation to ensure your mobile agents operate safely. From implementing cybersecurity controls to industrial regulation compliance, we help you eliminate robotic security debt.


References

1. World Economic Forum. (2026). Global Cybersecurity Outlook 2026. https://www.weforum.org/publications/global-cybersecurity-outlook-2026/in-full/3-the-trends-reshaping-cybersecurity/

2. Emutare. (2025). AI Ethics and Security: Balancing Innovation and Protection. https://insights.emutare.com/ai-ethics-and-security-balancing-innovation-and-protection/

3. Emutare. (2025). Adversarial Machine Learning: Understanding the Threats. https://insights.emutare.com/adversarial-machine-learning-understanding-the-threats/

4. Trend Micro. (2024). Silent Sabotage: Weaponizing AI Models in Exposed Containers. https://www.trendmicro.com/vinfo/us/security/news/cyber-attacks/silent-sabotage-weaponizing-ai-models-in-exposed-containers

5. Emutare. (2025). Managing Security Debt in Software Development: A Strategic Approach to Long-term Security Excellence. https://insights.emutare.com/managing-security-debt-in-software-development-a-strategic-approach-to-long-term-security-excellence/

6. National Institute of Standards and Technology (NIST). (2023). SP 800-82 Rev. 3: Guide to Operational Technology (OT) Security. https://csrc.nist.gov/pubs/sp/800/82/r3/final
