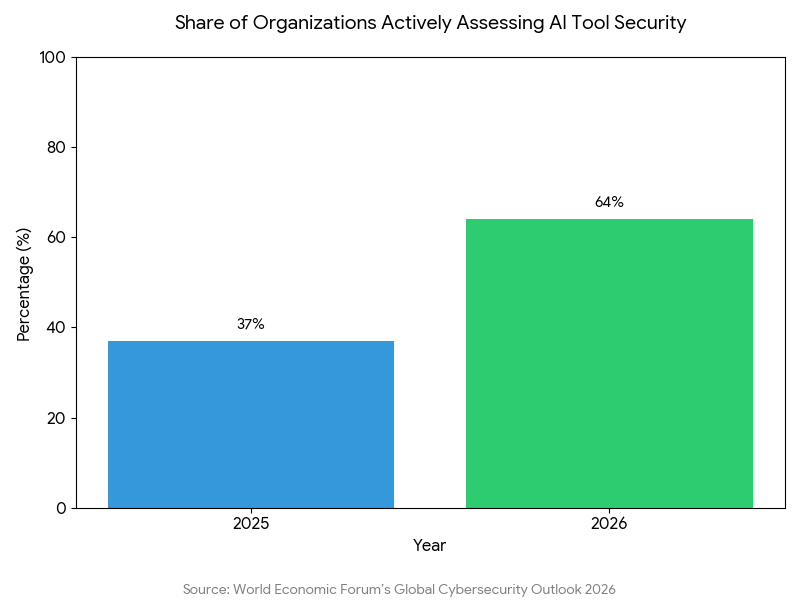

As we move deeper into 2026, the global business landscape has reached a definitive tipping point. We are no longer merely “experimenting” with artificial intelligence; we have entered the era of the AI-native enterprise. Recent data indicates that the share of organizations actively assessing the security of their AI tools has nearly doubled in just twelve months, rising from 37% in 2025 to 64% in 2026, according to the World Economic Forum’s Global Cybersecurity Outlook 2026.1

For business leaders and IT professionals, this shift represents more than just a technological upgrade. It is a fundamental change in the “speed of risk.” When autonomous agents and multi-agent systems begin handling supply chain logistics, financial transactions, and customer interactions, the margin for error disappears. A single misconfigured prompt or a neglected piece of security debt is no longer just a localized bug (it is a potential systemic failure).

The Duality of the AI-Native Era

The central challenge of this year is the “AI-fication” of threats. As we have seen in our previous exploration of AI Ethics and Security,2 balancing rapid innovation with protection requires a framework that prioritizes human accountability even when machines are making split-second decisions. We must recognize that while AI is an incredible force multiplier for defense, it is equally potent in the hands of adversaries.

Cisco research How AI will transform the workplace in 20263 indicates a fundamental shift toward “Connected Intelligence” by 2026. Their forecasts suggest that AI agents will no longer be mere tools but integrated team members. In such an environment, traditional perimeter-based security is obsolete. The “insider threat” is no longer just a disgruntled employee; it can be an autonomous agent that has been subtly manipulated through its training data or real-time inputs.

Resolving the Security Debt Bottleneck

One of the most significant hurdles to achieving true AI-native resilience is the accumulation of “security debt.” Many organizations rushed to integrate AI over the last two years, often bypassing rigorous security protocols to gain a competitive edge. As we previously discussed in our strategic guide on Managing Security Debt,4 this debt acts like a high-interest loan that eventually comes due, often at the most inconvenient time.

The consequences of ignoring this debt are becoming more visible in national statistics. Mandiant’s M-Trends 20255 notes that for the first time since 2010, the global median dwell time (the time an attacker stays in a system before detection) has increased to 11 days. Google attributes this to the increasing complexity of cloud environments, where security debt is harder to visualize and purge. When you layer complex AI models on top of this fragile foundation, you create “hidden” attack paths that are nearly impossible to map using traditional tools.

To navigate this, leaders must move beyond a “find and fix” mindset and adopt a “risk-first” operational model. This involves:

- Audit and Inventory: Identifying where AI is being used (including “Shadow AI” or consumer-facing tools used by staff).

- Prioritization: Using AI-driven analytics to determine which vulnerabilities actually sit on an active attack path.

- Automation of Remediation: Leveraging the very technology that creates the risk to help patch and secure the environment at machine speed.

Confronting Adversarial Evolution

The nature of the attacks themselves has evolved. We are moving past simple phishing into the realm of complex, data-driven manipulation. As we covered in our deep dive into Adversarial Machine Learning,6 attackers are increasingly focused on the integrity of the models themselves.

This is not a theoretical concern. Research published in the Journal of Medical Internet Research (2026)7 highlights that attackers with access to as few as 100 to 500 poisoned data samples can compromise specialized AI systems with a success rate of over 60%. Whether it is a healthcare system or a financial forecasting model, the ability of an adversary to “poison” the well of information means that your AI could be making perfectly logical decisions based on fundamentally corrupted data.

Practical Implementation: A Roadmap for Leaders

So, how does a modern organization secure an AI-native ecosystem without stifling growth? The answer lies in operationalizing governance.

1. Implement “Circuit Breaker” Governance

Just as an electrical circuit breaker prevents a surge from destroying an entire building, AI-native enterprises need “AI Firewalls” at the runtime layer. These tools monitor the inputs and outputs of AI agents in real-time, looking for signs of prompt injection, data leakage, or “hallucinations” that could lead to unauthorized actions.

2. Embrace the NIST AI Risk Management Framework (RMF)

The NIST AI RMF8 has become the gold standard for organizations looking to move from policy to practice. The framework encourages a cycle of “Map, Measure, and Manage.” By 2026, successful organizations will be using this framework to build an “AI Bill of Materials” (AI-BOM), ensuring they know exactly what data, models, and third-party libraries are powering their intelligence.

3. Shift from Alerting to “Attack Path” Simulation

In an environment with millions of telemetry signals, “alert fatigue” is a terminal condition for a security team. Leaders should pivot toward continuous exposure management. This means using AI to simulate how an attacker might move through your network, starting from a minor vulnerability and ending at your most sensitive data. If a piece of security debt does not sit on a viable attack path, it shouldn’t be your top priority.

Conclusion: The Path to Trustworthy Innovation

The 2026 security landscape is undeniably complex, but it is also full of opportunity. By addressing the ethical implications of AI, aggressively managing security debt, and preparing for adversarial tactics, organizations can build a foundation of trust that actually accelerates their digital transformation.

The transition to AI-native resilience is not a one-time project; it is a continuous evolution of culture, technology, and strategy. Those who wait for the “perfectly secure” AI model will be left behind, while those who build robust, adaptive frameworks today will define the markets of tomorrow.

As the era of AI-native enterprise arrives, navigating the “speed of risk” requires more than just tools; it demands strategic resilience. Emutare provides the specialized expertise needed to resolve security debt and secure autonomous environments. Through our AI Adoption and Technology Consultation, we help you operationalize governance and implement robust AI-driven process automation. Whether you need to simulate complex attack paths or deploy advanced SIEM and XDR solutions, Emutare ensures your innovation remains protected. Don’t let your digital transformation be stalled by emerging threats. Partner with Emutare to build a secure, compliant, and adaptive future today.

References

- World Economic Forum. (2026). Global Cybersecurity Outlook 2026. https://www.weforum.org/publications/global-cybersecurity-outlook-2026/in-full/3-the-trends-reshaping-cybersecurity/ ↩︎

- Emutare. (2025). AI Ethics and Security: Balancing Innovation and Protection. https://insights.emutare.com/ai-ethics-and-security-balancing-innovation-and-protection/ ↩︎

- Cisco. (2025). How AI will transform the workplace in 2026. https://newsroom.cisco.com/c/r/newsroom/en/us/a/y2025/m12/how-ai-will-transform-the-workplace-in-2026.html ↩︎

- Emutare. (2025). Managing Security Debt in Software Development: A Strategic Approach to Long-term Security Excellence. https://insights.emutare.com/managing-security-debt-in-software-development-a-strategic-approach-to-long-term-security-excellence/ ↩︎

- Google. (2025). M-Trends 2025 Report. https://services.google.com/fh/files/misc/m-trends-2025-en.pdf ↩︎

- Emutare. (2025). Adversarial Machine Learning: Understanding the Threats. https://insights.emutare.com/adversarial-machine-learning-understanding-the-threats/ ↩︎

- Abtahi, F., Seoane, F., Pau, I., & Vega-Barbas, M. (2026). Data poisoning vulnerabilities across health care artificial intelligence architectures: Analytical security framework and defense strategies. Journal of Medical Internet Research, 28, Article e87969. https://www.jmir.org/2026/1/e87969 ↩︎

- National Institute of Standards and Technology. (2025). NIST AI RMF. https://www.nist.gov/itl/ai-risk-management-framework ↩︎

Related Blog Posts

- APRA CPS 234: Compliance Guide for Financial Institutions

- SOC 2 Compliance: Preparation and Audit Process

- Azure Security Best Practices for Australian Businesses: A Comprehensive Guide for 2025

- Tabletop Exercises: Testing Your Incident Response Plan

- BGP Security: Protecting Your Internet Routing

- Data-Centric Security Architecture: Building Resilience Through Data-Focused Protection

- Network Security Zoning and Segmentation Design: Building Resilient Digital Perimeters in 2025