For the better part of the last decade, the mantra of the digital age was simple: “Data is the new oil.”

Companies hoarded every byte they could capture: customer clickstreams, decade-old transaction logs, and redundant backups of backups. They were convinced that one day this data would yield profitable insights. Storage was cheap, the cloud was infinite, and the potential for future analytics seemed boundless.

But in 2025, the narrative has shifted. Data is no longer just an asset; it is a liability.

We are witnessing a fundamental change in how security professionals view information. Every record you store is a record that can be stolen, ransomed, or leaked.[1] In an era of aggressive ransomware and privacy litigation, the most secure data is the data you do not have. This is the era of Data Minimization as a Security Strategy.

This article explores why “collecting everything” is a failed strategy and how shifting to a “minimum viable data” model can reduce your attack surface, streamline compliance, and, counter-intuitively, make your forensic investigations significantly more effective.

The Security Case for Deletion

Traditionally, data minimization was viewed as a compliance headache, a box to check for the GDPR or CCPA. However, savvy CISOs now recognize it as a high-impact security control.

Consider the mechanics of a modern breach. Attackers are not just looking for a foothold; they are looking for volume. They want to exfiltrate massive datasets to extort the victim. If your organization is holding onto 10 years of customer data when you only need two years for operational purposes, you are voluntarily holding roughly five times the data an attacker can steal. A 2019 peer-reviewed study in Nature Communications, “Estimating the success of re-identifications in incomplete datasets using generative models,”[1] challenged the idea that de-identified archives are safe to keep: its model estimated that 99.98% of Americans could be correctly re-identified in any dataset using just 15 demographic attributes. This finding indicates that “anonymized” historical archives remain a high-value target for attackers, because stripping direct identifiers alone does not prevent re-identification. For data with no remaining business purpose, the most reliable safeguard against weaponization is therefore deletion rather than de-identification.
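
To make the re-identification risk concrete, the sketch below counts how many rows in a small, hypothetical “anonymized” table are unique on their demographic attributes. It is a simple sample-uniqueness check, not the generative model used in the study, and the field names and rows are invented for illustration.

```python
from collections import Counter


def unique_fraction(records, quasi_identifiers):
    """Return the share of records whose quasi-identifier combination is unique."""
    if not records:
        return 0.0
    keys = [tuple(r[q] for q in quasi_identifiers) for r in records]
    counts = Counter(keys)
    unique = sum(1 for k in keys if counts[k] == 1)
    return unique / len(records)


# Hypothetical rows: names already removed, demographics kept.
rows = [
    {"zip": "02139", "birth_year": 1984, "gender": "F"},
    {"zip": "02139", "birth_year": 1984, "gender": "M"},
    {"zip": "02139", "birth_year": 1990, "gender": "F"},
    {"zip": "02140", "birth_year": 1984, "gender": "F"},
    {"zip": "02139", "birth_year": 1984, "gender": "F"},
]

one_attribute = unique_fraction(rows, ["zip"])
three_attributes = unique_fraction(rows, ["zip", "birth_year", "gender"])
print(one_attribute, three_attributes)
```

In this toy table, one of the five rows is unique on ZIP code alone, while three of the five are unique once birth year and gender are added. Each extra attribute narrows the crowd a person can hide in.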

Reducing the “Blast Radius”

In cybersecurity, we often talk about the “blast radius” of an attack: how far the damage spreads once a perimeter is breached.

If a threat actor compromises an S3 bucket containing only active, anonymized data for the current fiscal quarter, the blast radius is contained. If that same bucket contains clear-text PII (Personally Identifiable Information) dating back to 2015, the blast radius is catastrophic.

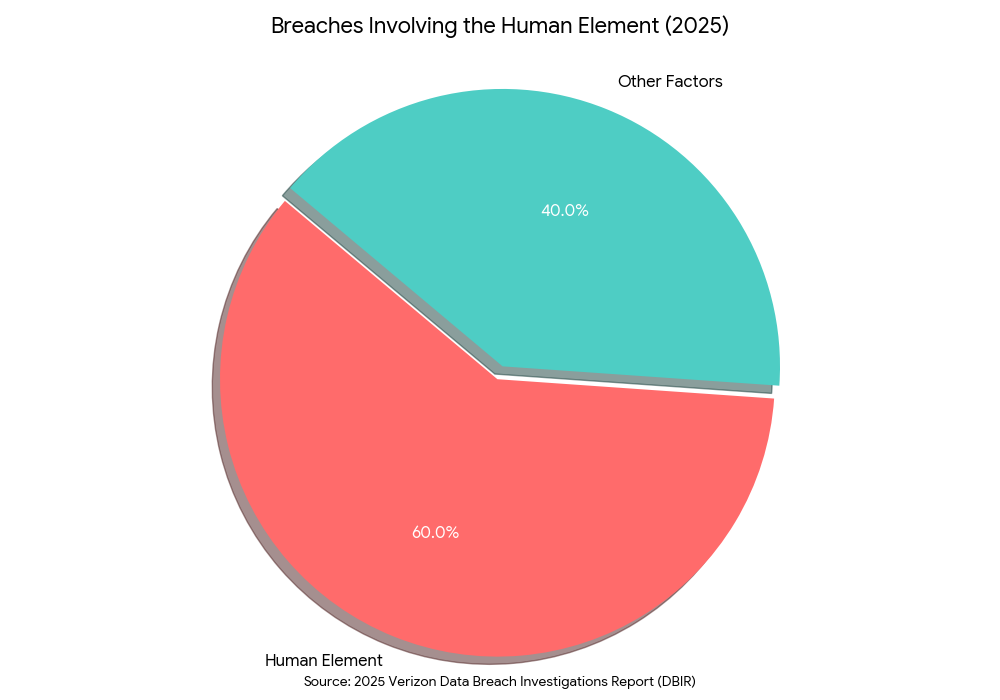

According to the 2025 Verizon Data Breach Investigations Report (DBIR),[2] the human element remains the most significant vulnerability in modern cybersecurity despite advancements in security automation: 60% of all breaches involved the human element, stemming from a mix of employee error, credential theft, and social manipulation. When an account is compromised this way, the most dependable safeguard is ensuring that it has access to as little sensitive data as possible.

The “Toxic Asset” Concept

To implement data minimization effectively, business leaders must reframe how they value information. We must distinguish between “Business Critical Data” and “Toxic Data.”

- Business Critical Data: Information actively used to generate revenue, support operations, or meet legal requirements.

- Toxic Data: Information that has no operational value but carries high liability. This includes duplicate data, orphaned datasets from legacy projects, and PII that has exceeded its retention period.

To learn more about how unmanaged data creates silent risks, read our guide on Shadow Data and Silent Failures: Why Your Cloud Strategy Needs a Forensic Upgrade.[3] As we covered there, “shadow data” (unknown and unmanaged data stores) is often the primary source of toxic data accumulation.

The Liability of Hoarding

Toxic data is a dormant threat. It sits on your servers, incurring storage costs and backup overhead, waiting for an incident to turn it into a lawsuit.

The Cisco 2025 Data Privacy Benchmark Study[4] refutes the assumption that data governance is a sunk cost, reporting that organizations prioritizing privacy and minimization achieve a 1.6x return on investment. When you view data hygiene as an optional expense, you ignore the consensus of the 95% of organizations that report the benefits of privacy investments outweigh the costs. With 94% of consumers refusing to engage with brands that have poor data protection, failing to minimize your data footprint is a direct threat to revenue.

Practical Implementation: A 4-Step Framework

Moving from a hoarding mindset to a minimization strategy requires more than just a “delete” button. It requires a structured governance framework.

1. Discovery and Inventory

You cannot minimize what you cannot see. The first step is a comprehensive data discovery exercise. This goes beyond structured databases; you must scan unstructured data repositories (SharePoint, Google Drive, S3 buckets) where employees often dump spreadsheets containing PII.

Automated discovery tools are essential here. They can classify data by sensitivity (e.g., “Contains Credit Card Number” or “Contains Social Security Number”) and age.[3]
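
As a rough illustration of how such classification can work, the sketch below tags text with the two sensitivity labels mentioned above. It assumes US-style SSN formatting and uses the Luhn checksum to cut down on false card-number matches. Commercial discovery tools are far more thorough, and the patterns here are simplified assumptions.

```python
import re

# Simplified patterns: a dashed US SSN, and 13-19 digits optionally
# separated by spaces or hyphens for payment card numbers.
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
CARD_RE = re.compile(r"\b(?:\d[ -]?){13,19}\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def classify(text: str) -> set:
    """Return the sensitivity labels that apply to a piece of text."""
    labels = set()
    if SSN_RE.search(text):
        labels.add("Contains Social Security Number")
    for match in CARD_RE.finditer(text):
        digits = re.sub(r"\D", "", match.group())
        if 13 <= len(digits) <= 19 and luhn_valid(digits):
            labels.add("Contains Credit Card Number")
            break
    return labels


print(classify("Customer SSN 123-45-6789, card 4111 1111 1111 1111"))
```

The checksum step matters in practice: long order numbers and timestamps look like card numbers to a bare regular expression, and filtering them out keeps the inventory credible.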

2. The “Rotting” Analysis

Once inventoried, analyze your data for ROT: Redundant, Obsolete, and Trivial information.

- Redundant: Do you have five copies of the same marketing database from 2021?

- Obsolete: Are you holding ex-employee records 7 years past their departure, potentially in violation of local labor laws?

- Trivial: Are you backing up temporary system files or employee MP3 collections?

Eliminating ROT is the “low-hanging fruit” of security. It frees up storage budget and immediately lowers your risk profile without impacting business operations.[4]
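
The three ROT categories above can be sketched as a pass over a file inventory. The example assumes the discovery step has already produced a list of paths with content hashes and last-modified dates. The inventory, the placeholder hash values, the two-year age threshold, and the list of trivial extensions are all hypothetical.

```python
from datetime import date, timedelta
from pathlib import PurePosixPath

TRIVIAL_EXTENSIONS = {".tmp", ".mp3"}  # assumption: tune to your environment


def rot_report(inventory, today, max_age_days=730):
    """Group inventory paths into redundant, obsolete, and trivial buckets."""
    seen_hashes = set()
    report = {"redundant": [], "obsolete": [], "trivial": []}
    for item in sorted(inventory, key=lambda i: i["path"]):
        # Redundant: same content hash as a file already seen.
        if item["sha256"] in seen_hashes:
            report["redundant"].append(item["path"])
        seen_hashes.add(item["sha256"])
        # Trivial: file types with no business value.
        if PurePosixPath(item["path"]).suffix.lower() in TRIVIAL_EXTENSIONS:
            report["trivial"].append(item["path"])
        # Obsolete: untouched for longer than the age threshold.
        elif today - item["last_modified"] > timedelta(days=max_age_days):
            report["obsolete"].append(item["path"])
    return report


# Hypothetical inventory; the hash values are shortened placeholders.
inventory = [
    {"path": "marketing/db_2021.csv", "sha256": "aa11", "last_modified": date(2021, 3, 1)},
    {"path": "marketing/db_2021_copy.csv", "sha256": "aa11", "last_modified": date(2021, 3, 1)},
    {"path": "hr/leavers_2015.xlsx", "sha256": "bb22", "last_modified": date(2015, 6, 30)},
    {"path": "scratch/session.tmp", "sha256": "cc33", "last_modified": date(2025, 1, 10)},
    {"path": "sales/pipeline.csv", "sha256": "dd44", "last_modified": date(2025, 2, 1)},
]

report = rot_report(inventory, today=date(2025, 3, 1))
for category, paths in report.items():
    print(category, paths)
```

A file can land in more than one bucket (the duplicate marketing export is both redundant and obsolete), which is useful when prioritizing what to delete first.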

3. Defensible Deletion and Retention Policies

This is where Legal and IT must collaborate. A “Defensible Deletion” strategy means you delete data according to a documented, consistent policy. This protects the organization during litigation; you are not “destroying evidence,” you are “following standard lifecycle management.”
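
One way to make deletion “defensible” is to have every decision come from the written policy and leave a record behind. The sketch below assumes a hypothetical retention schedule, policy label, and record layout. It is an illustration of the idea, not a substitute for legal review.

```python
from datetime import date, timedelta

# Hypothetical retention schedule agreed between Legal and IT (in days).
RETENTION_DAYS = {"web_logs": 365, "invoices": 7 * 365}
POLICY_VERSION = "retention-policy-v1"  # hypothetical policy label


def deletion_decision(record, today):
    """Decide whether a record may be deleted and return an audit entry."""
    limit = RETENTION_DAYS.get(record["category"])
    if limit is None:
        action, reason = "retain", "no retention rule for category; escalate for review"
    elif record.get("legal_hold"):
        action, reason = "retain", "legal hold"
    elif today - record["created"] > timedelta(days=limit):
        action, reason = "delete", f"exceeded {limit}-day retention"
    else:
        action, reason = "retain", "within retention period"
    return {
        "record_id": record["id"],
        "action": action,
        "reason": reason,
        "policy": POLICY_VERSION,
        "decided_on": today.isoformat(),
    }


today = date(2025, 3, 1)
expired = {"id": "log-001", "category": "web_logs", "created": date(2023, 1, 1)}
held = {"id": "log-002", "category": "web_logs", "created": date(2023, 1, 1), "legal_hold": True}

print(deletion_decision(expired, today))
print(deletion_decision(held, today))
```

The audit entries are the point: if deletion is ever questioned in litigation, they show that each record was removed under a consistent rule, and that legal holds took precedence over the schedule.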

However, deletion isn’t the only option. For data that must be kept for long-term analytics but isn’t needed for daily operations, you should employ Anonymization.

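As previously covered in Anonymization vs. Pseudonymization Techniques: A Comprehensive Guide for Modern Data Protection,[5] anonymizing a dataset allows you to retain its utility (for trends and AI training) while shedding most of the risk. Stripping direct identifiers is only the first step; quasi-identifiers such as dates of birth and postal codes must also be generalized or aggregated, because they can be combined to re-identify individuals. If an attacker steals a fully anonymized dataset, they have stolen statistics, not identities.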
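
The sketch below shows one simplified way to prepare a dataset for long-term analytics: drop direct identifiers, coarsen age and ZIP code, and suppress any row whose coarsened combination is shared by fewer than k people. The field names, the sample rows, and the choice of k are assumptions. This is a k-anonymity-style illustration, not a guarantee against re-identification.

```python
from collections import Counter

DIRECT_IDENTIFIERS = {"name", "email"}  # assumption: fields to drop outright


def generalize(row):
    """Drop direct identifiers and coarsen age and ZIP code."""
    out = {k: v for k, v in row.items() if k not in DIRECT_IDENTIFIERS}
    decade = (out.pop("age") // 10) * 10
    out["age_band"] = f"{decade}-{decade + 9}"
    out["zip"] = out["zip"][:3] + "**"
    return out


def group_key(row):
    """Quasi-identifier combination used to measure group size."""
    return (row["age_band"], row["zip"])


def anonymize(rows, k=2):
    """Keep only generalized rows that share their group with at least k rows."""
    generalized = [generalize(r) for r in rows]
    counts = Counter(group_key(r) for r in generalized)
    return [r for r in generalized if counts[group_key(r)] >= k]


# Hypothetical customer rows.
customers = [
    {"name": "A. Example", "email": "a@example.com", "age": 34, "zip": "02139", "spend": 120},
    {"name": "B. Example", "email": "b@example.com", "age": 37, "zip": "02134", "spend": 80},
    {"name": "C. Example", "email": "c@example.com", "age": 61, "zip": "94107", "spend": 45},
]

safe_rows = anonymize(customers, k=2)
print(safe_rows)
```

The third customer is dropped because nobody else shares their age band and ZIP prefix. Losing a few outliers is the price of ensuring that every remaining row blends into a group.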

4. Automated Lifecycle Management

Manual cleanup is doomed to fail. Implementation involves configuring your cloud platforms to enforce lifecycle rules automatically.

- Example: Configure an AWS S3 Lifecycle Rule to move log files to cold storage after 90 days and permanently delete them after 365 days.

- Example: Set email retention policies in Microsoft 365 to auto-archive mail older than 2 years and hard-delete mail older than 7 years (unless under legal hold).
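
The S3 example above can be sketched as a lifecycle configuration built in Python. The dictionary follows the structure accepted by boto3's `put_bucket_lifecycle_configuration`. The rule ID, the `logs/` prefix, the bucket name, and the choice of `GLACIER` as the cold storage class are assumptions for illustration.

```python
def log_lifecycle_rule(prefix="logs/", cold_after_days=90, delete_after_days=365):
    """Build an S3 lifecycle configuration: cold storage first, then expiry."""
    if cold_after_days >= delete_after_days:
        raise ValueError("objects must move to cold storage before they expire")
    return {
        "Rules": [
            {
                "ID": "logs-cold-then-expire",  # hypothetical rule name
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": cold_after_days, "StorageClass": "GLACIER"}
                ],
                "Expiration": {"Days": delete_after_days},
            }
        ]
    }


config = log_lifecycle_rule()
print(config)

# With boto3 installed and credentials configured, the rule could be applied as:
#   import boto3
#   boto3.client("s3").put_bucket_lifecycle_configuration(
#       Bucket="example-log-bucket",  # hypothetical bucket name
#       LifecycleConfiguration=config,
#   )
```

On versioned buckets, an expiration rule like this only adds a delete marker to the current version. A `NoncurrentVersionExpiration` setting is also needed if older versions should be permanently removed.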

The Forensic Readiness Paradox

There is a common misconception that data minimization hurts forensic investigations. Security teams worry, “If we delete logs or old data, won’t we lose the evidence we need to investigate a breach?”

The reality is the opposite. Data minimization actually enhances forensic readiness.

In a digital forensic investigation, the biggest enemy is often “noise.” Investigators have to sift through terabytes of irrelevant data to find the few packets or file access logs that matter. When an organization practices strict data minimization, the haystack is smaller, making the needle easier to find.

As detailed in our article on Forensic Readiness: Preparation for Investigations,[6] readiness is about having the right data, not the most data. By curating your logs and disposing of irrelevant noise, you allow your incident response team to query data faster and reach conclusions quicker.

Furthermore, if a breach occurs in a minimized environment, the scope of the forensic audit is reduced. Instead of having to review 10 million files to determine which customers to notify, the team may only need to review 100,000 active records. This difference can save weeks of expensive consulting time and millions in regulatory fines.

The Regulatory Push: NIST and Beyond

Data minimization is no longer just a “best practice”; it is becoming a codified standard. The NIST Privacy Framework[7] references Data Minimization as a privacy principle enabled by the Data Processing Management and Disassociated Processing categories under the Control function, rather than listing it as a distinct subcategory. It advises organizations to verify that “data processing is limited to what is necessary for the identified purpose.”

Regulatory bodies are losing patience with companies that claim they “accidentally” retained data that was stolen. Under GDPR, storing data beyond its necessary lifespan is, in itself, a violation, even if no breach occurs.

We are also seeing a shift in AI regulation. As companies rush to train Large Language Models (LLMs) on internal data, the risk of “model poisoning” or leaking IP increases. Minimizing the input data to only high-quality, sanitized inputs is not just good security; it results in better, more accurate AI models.

Conclusion: Less Really Is More

The security strategies of the future will not be measured by how many petabytes of data we can protect, but by how efficiently we can reduce our footprint.

Data minimization is the ultimate “Left of Boom” strategy. It prevents the problem before it exists. By treating data as a potential toxic asset, organizations can reduce their costs, simplify their compliance, and deny attackers the very reward they seek.

Actionable steps for this week:

- Run a “Dark Data” scan on one of your primary cloud storage buckets to identify how much data hasn’t been touched in over 12 months.

- Review your Data Retention Policy. If it says “keep forever,” it needs an immediate update.

- Audit your backups to ensure you aren’t paying to preserve toxic data that you deleted from production years ago.
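
For the first step in the list above, a “dark data” scan can start as something very small. The sketch below walks a local directory tree and reports files that have not been read or modified in over 12 months. It assumes a filesystem that records access times reliably. For a cloud bucket the same idea applies, but object listings typically expose only a last-modified time, so access patterns would have to come from access logs or the provider's storage analytics.

```python
import os
import tempfile
import time

SECONDS_PER_DAY = 86400


def stale_files(root, max_idle_days=365, now=None):
    """Return (path, size_in_bytes) for files idle longer than max_idle_days."""
    now = time.time() if now is None else now
    cutoff = now - max_idle_days * SECONDS_PER_DAY
    stale = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            info = os.stat(path)
            # Treat the later of last access and last modification as "last touched".
            last_touched = max(info.st_atime, info.st_mtime)
            if last_touched < cutoff:
                stale.append((path, info.st_size))
    return stale


# Demo on a throwaway directory with one old and one fresh file.
with tempfile.TemporaryDirectory() as root:
    old_path = os.path.join(root, "export_2019.csv")
    new_path = os.path.join(root, "current.csv")
    for path in (old_path, new_path):
        with open(path, "w") as handle:
            handle.write("id,value\n")
    two_years_ago = time.time() - 2 * 365 * SECONDS_PER_DAY
    os.utime(old_path, (two_years_ago, two_years_ago))  # backdate the old file
    stale_names = [os.path.basename(p) for p, _size in stale_files(root)]

print(stale_names)
```

Summing the reported sizes gives a first estimate of how much storage, and how much exposure, is tied up in data nobody is using.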

Transform your data from a liability into a streamlined asset. Emutare supports your move to a “minimum viable data” model through expert Cybersecurity Governance and Advisory services. We assist in developing risk management frameworks that align with strict data regulations. Our Cybersecurity Risk Assessments help locate toxic data risks, while our Incident Response and Disaster Recovery Planning ensures your organization maximizes forensic readiness. Reduce your attack surface and secure your operations today with Emutare’s comprehensive protection strategies.

References

1. Rocher, L., Hendrickx, J.M. & de Montjoye, Y.-A. (2019). Estimating the success of re-identifications in incomplete datasets using generative models. Nat Commun 10, 3069. https://www.nature.com/articles/s41467-019-10933-3

2. Verizon. (2025). Verizon Data Breach Investigations Report (DBIR). https://www.verizon.com/business/resources/Tbb1/reports/2025-dbir-data-breach-investigations-report.pdf

3. Emutare. (2025). Shadow Data and Silent Failures: Why Your Cloud Strategy Needs a Forensic Upgrade. https://insights.emutare.com/shadow-data-and-silent-failures-why-your-cloud-strategy-needs-a-forensic-upgrade/

4. Cisco. (2025). Cisco 2025 Data Privacy Benchmark Study. https://www.cisco.com/c/en/us/about/trust-center/data-privacy-benchmark-study.html

5. Emutare. (2025). Anonymization vs. Pseudonymization Techniques: A Comprehensive Guide for Modern Data Protection. https://insights.emutare.com/anonymization-vs-pseudonymization-techniques-a-comprehensive-guide-for-modern-data-protection/

6. Emutare. (2025). Forensic Readiness: Preparation for Investigations. https://insights.emutare.com/forensic-readiness-preparation-for-investigations/

7. National Institute of Standards and Technology. (2020). NIST Privacy Framework Core. https://www.nist.gov/system/files/documents/2021/05/05/NIST-Privacy-Framework-V1.0-Core-PDF.pdf
