For decades, the cybersecurity industry has been fighting a two-front war. We have spent billions of dollars building fortifications for “Data at Rest” (encryption on hard drives) and “Data in Transit” (TLS/SSL for network traffic). These battles, while not fully won, are well-understood. We know how to lock the vault, and we know how to drive the armored truck.

But there has always been a third front, a gaping hole in our defenses that most organizations simply accepted as the cost of doing business: Data in Use.

To process data, whether to query a database, train an AI model, or calculate a financial trend, you traditionally had to decrypt it. You had to take the diamond out of the vault and place it on the table. For the milliseconds or minutes that the data sits in system memory (RAM) during processing, it is vulnerable: exposed to prying administrators, memory-scraping malware, and insider threats.

In 2026, this acceptance of risk is no longer necessary. We are entering the era of Privacy-Preserving Analytics, a suite of technologies that allows companies to compute valuable insights from sensitive data without ever seeing the raw data itself. It sounds like magic (calculating the average salary of a room full of people without anyone revealing their pay slip), but it is actually just advanced mathematics.

This article explores how technologies like Homomorphic Encryption (HE) and Secure Multi-Party Computation (SMPC) are moving from academic theory to the enterprise boardroom, and why your 2026 data strategy is incomplete without them.

The “Holy Grail” of Cryptography

The concept of processing encrypted data was long considered the “Holy Grail” of cryptography: theoretically appealing but computationally impractical. However, recent breakthroughs have changed the landscape.

To understand the solution, we must first understand the depth of the problem. As we previously covered in Shadow Data and Silent Failures: Why Your Cloud Strategy Needs a Forensic Upgrade,1 data often leaks not because the storage was insecure, but because the data was moved, copied, and decrypted for legitimate business workflows. A data scientist downloads a production dataset to a dev server to “test a model,” or a marketing analyst pulls raw customer lists to “run a query.” In that moment of use, the data becomes shadow data: untracked, unencrypted, and exposed.

Privacy-Preserving Analytics solves this by ensuring the data never leaves the encrypted state, even while it is being worked on.

The Glovebox Analogy

The best way to visualize Homomorphic Encryption (HE) is to imagine a high-security bio-lab glovebox.

In this analogy:

- The Data is a dangerous chemical.

- The Encryption is the sealed glass box with thick rubber gloves built into the side.

- The Analyst is the scientist standing outside.

The scientist can put their hands into the gloves and manipulate the chemicals (the data). They can mix them, weigh them, and make them react (perform calculations). However, they never actually touch the chemicals. They never inhale the fumes. The chemicals never leave the secure environment. When the scientist is done, they have a result (the new compound), but the raw materials remain contained the entire time.

In technical terms, HE allows you to perform mathematical operations on ciphertexts (encrypted text) that, when decrypted, match the result of operations performed on the plaintexts.2 If you encrypt the numbers 5 and 10, send them to a cloud server, and ask the server to “add them,” the server adds the encrypted blobs. It sends back a new encrypted blob. When you decrypt that blob using your private key, you get “15.” The server never knew it was adding 5 and 10; it just knew it was processing mathematical structures.
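To make the 5 + 10 example above concrete, here is a minimal sketch using the Paillier cryptosystem. Paillier is only additively homomorphic (it can add ciphertexts and multiply them by plaintext constants, but not multiply two ciphertexts together), so it is a simpler relative of the fully homomorphic schemes discussed in this article, not a replacement for them. The function names are illustrative, and the primes are deliberately tiny so the example runs instantly; it is a teaching sketch, not a secure implementation.

```python
import math
import secrets

# Toy primes (both are Mersenne primes) chosen only so the example runs instantly.
# Real Paillier keys use randomly generated primes of at least 1,024 bits each.
P, Q = 2**31 - 1, 2**61 - 1

def keygen(p: int = P, q: int = Q):
    n = p * q
    lam = math.lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # this shortcut is valid because the generator is g = n + 1
    return n, (n, lam, mu)  # (public key, private key)

def encrypt(n: int, m: int) -> int:
    n2 = n * n
    while True:  # fresh randomness r makes encryption non-deterministic
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, m, n2) * pow(r, n, n2)) % n2

def decrypt(private_key, c: int) -> int:
    n, lam, mu = private_key
    x = pow(c, lam, n * n)
    return ((x - 1) // n * mu) % n

def add_encrypted(n: int, c1: int, c2: int) -> int:
    # Multiplying two ciphertexts adds the hidden plaintexts (mod n).
    return (c1 * c2) % (n * n)

public_key, private_key = keygen()
c5, c10 = encrypt(public_key, 5), encrypt(public_key, 10)  # client side
c_sum = add_encrypted(public_key, c5, c10)                  # server side: sees only ciphertexts
print(decrypt(private_key, c_sum))                          # client side: prints 15
```

The server in this sketch only ever handles c5, c10, and c_sum, which look like large random integers; encrypting 5 twice yields two different ciphertexts, so the server cannot even tell when two inputs are equal.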

Beyond Anonymization: The “Re-Identification” Threat

For years, the standard approach to privacy was anonymization. Companies would scrub names and Social Security numbers from datasets and assume they were safe to share.

However, as we detailed in Anonymization vs. Pseudonymization Techniques: A Comprehensive Guide for Modern Data Protection,2 traditional anonymization is increasingly fragile. With enough auxiliary data (social media logs, public voter records, location metadata), AI models can now “re-identify” users in supposedly anonymous datasets with frightening accuracy.
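The basic mechanism does not even need AI. A classic linkage attack joins an “anonymized” dataset with a public one on quasi-identifiers, fields such as ZIP code, birth date, and sex that are not names but are often unique in combination. The sketch below illustrates the idea; every record, name, and field is invented for the example.

```python
# Hypothetical "anonymized" medical records: names removed, quasi-identifiers kept.
medical = [
    {"zip": "02138", "birth_date": "1945-07-31", "sex": "F", "diagnosis": "hypertension"},
    {"zip": "02139", "birth_date": "1980-01-15", "sex": "M", "diagnosis": "asthma"},
    {"zip": "02138", "birth_date": "1990-03-02", "sex": "F", "diagnosis": "diabetes"},
]

# Hypothetical public auxiliary data (for example, a voter roll) that still carries names.
voters = [
    {"name": "Jane Roe", "zip": "02138", "birth_date": "1945-07-31", "sex": "F"},
    {"name": "John Doe", "zip": "02139", "birth_date": "1980-01-15", "sex": "M"},
    {"name": "Ann Poe", "zip": "02140", "birth_date": "1990-03-02", "sex": "F"},
]

QUASI_IDS = ("zip", "birth_date", "sex")

def reidentify(anonymized, auxiliary, keys=QUASI_IDS):
    # Index the named dataset by its quasi-identifier combination.
    index = {}
    for person in auxiliary:
        index.setdefault(tuple(person[k] for k in keys), []).append(person["name"])
    matches = []
    for record in anonymized:
        candidates = index.get(tuple(record[k] for k in keys), [])
        if len(candidates) == 1:  # a unique match re-identifies the record
            matches.append((candidates[0], record["diagnosis"]))
    return matches

print(reidentify(medical, voters))
# [('Jane Roe', 'hypertension'), ('John Doe', 'asthma')]
```

Removing the name column did not protect two of the three patients; only the record whose ZIP code differed from the auxiliary data escaped the join.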

This is where Privacy-Enhancing Technologies (PETs) offer a superior alternative.

According to a comprehensive report by NIST (National Institute of Standards and Technology) on Privacy-Enhancing Cryptography,3 technologies like HE and SMPC provide “mathematical guarantees” of privacy that statistical anonymization cannot match. NIST highlights that these tools are essential for “collaborative insights,” such as multiple hospitals that want to train a cancer-detection AI on patient records but are not legally permitted to share those records with each other.

With HE, Hospital A and Hospital B can encrypt their patient data under a common key setup (for example, a shared public key or a multi-key scheme) and send it to a central server. The server trains the AI model on the encrypted data. The model learns to spot cancer patterns, but the server never “sees” a single patient X-ray. The privacy is hard-coded into the math, not just a policy agreement.

The Performance Reality Check: Is it Ready?

For a long time, the criticism of Homomorphic Encryption was that it was too slow. In 2015, performing a simple multiplication on encrypted data could be thousands of times slower than on plaintext.

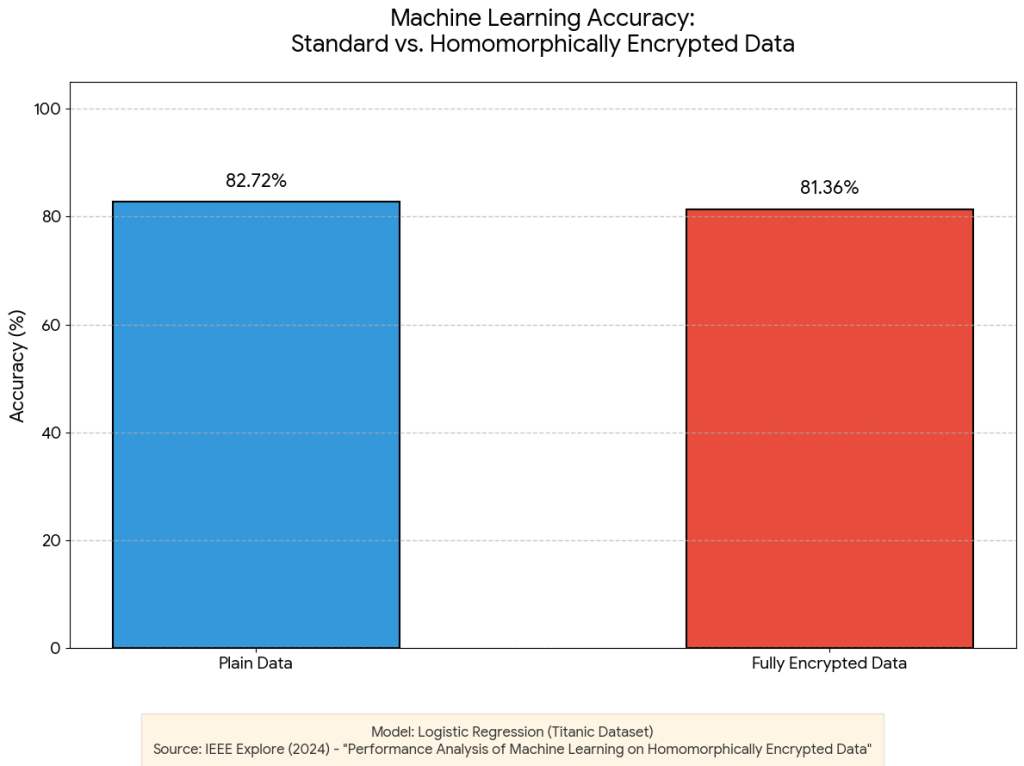

However, published results show a dramatic improvement. A 2024 study presented at the 16th International Conference on Wireless Communications and Signal Processing (WCSP) and available on IEEE Xplore, titled “Performance Analysis of Machine Learning on Homomorphically Encrypted Data,”4 ran experiments comparing standard machine learning against machine learning on encrypted data.

The results were eye-opening for skeptics. The researchers found that for a logistic regression model trained on the Titanic dataset, the accuracy on plain data was 82.72%, while the accuracy on fully encrypted data was 81.36%.

This is a critical insight for business leaders. The question is no longer “Does it work?” The question is, “Is a 1.36-percentage-point drop in accuracy an acceptable trade-off for never exposing the raw data?” For a marketing recommendation engine, the answer is almost certainly yes. For a high-frequency trading algorithm where microseconds matter, perhaps not yet, since encrypted computation still adds substantial latency. But the gap is closing.
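The sketch below shows, in miniature, what scoring a logistic regression model on encrypted inputs can look like. It does not reproduce the cited study's setup or scheme; it assumes the additively homomorphic Paillier cryptosystem with toy primes, a hypothetical fixed-point scale of 100, and an invented three-feature model. The server computes the encrypted linear score w · x + b using plaintext weights, and the client decrypts it and applies the sigmoid locally, because a non-linear function like the sigmoid cannot be evaluated with Paillier's additions alone.

```python
import math
import secrets

# Toy Paillier key (both primes are Mersenne primes); far too small for real use.
P, Q = 2**31 - 1, 2**61 - 1
N, N2 = P * Q, (P * Q) ** 2
LAM = math.lcm(P - 1, Q - 1)
MU = pow(LAM, -1, N)
SCALE = 100  # hypothetical fixed-point scale: Paillier only works on integers mod N

def encrypt(m: int) -> int:
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            break
    return (pow(N + 1, m % N, N2) * pow(r, N, N2)) % N2

def decrypt_signed(c: int) -> int:
    m = ((pow(c, LAM, N2) - 1) // N * MU) % N
    return m - N if m > N // 2 else m  # values above N/2 encode negative numbers

def encrypted_linear_score(enc_features, int_weights, int_bias):
    """Server side: returns Enc(w . x + b) without ever decrypting x."""
    acc = encrypt(int_bias)
    for c, w in zip(enc_features, int_weights):
        # Enc(x)^w decrypts to w*x; multiplying ciphertexts adds plaintexts.
        acc = (acc * pow(c, w % N, N2)) % N2
    return acc

# Hypothetical model and input.
weights, bias = [0.8, -1.5, 0.3], -0.2
features = [1.2, 0.4, 2.0]

# Client: encrypt fixed-point features and send only ciphertexts.
enc_x = [encrypt(round(x * SCALE)) for x in features]

# Server: plaintext model, encrypted data. The bias is scaled twice to match w*x.
w_int = [round(w * SCALE) for w in weights]
enc_score = encrypted_linear_score(enc_x, w_int, round(bias * SCALE * SCALE))

# Client: decrypt, rescale, and apply the sigmoid locally.
score = decrypt_signed(enc_score) / SCALE**2
probability = 1 / (1 + math.exp(-score))
print(score, probability)  # score is 0.76, identical to the plaintext computation
```

Rounding inputs and weights to fixed point is one place where small numeric differences between encrypted and plaintext pipelines can creep in; whether that contributes to the gap reported in the study is not something this sketch demonstrates.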

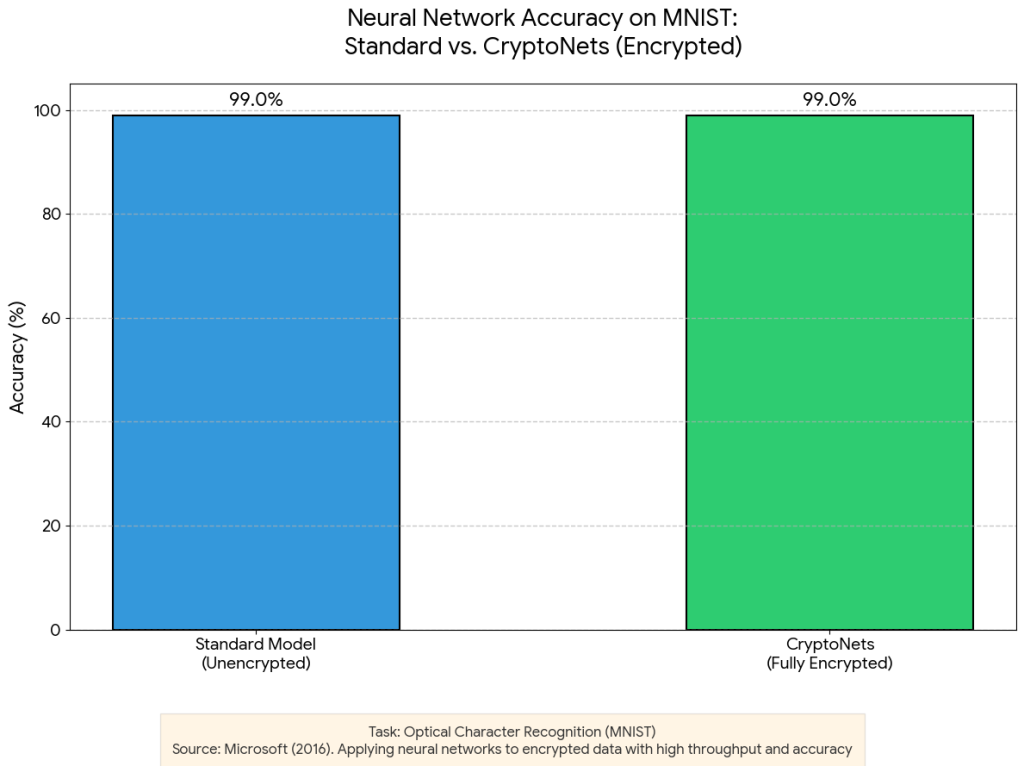

A pivotal Microsoft Research study, “CryptoNets: Applying Neural Networks to Encrypted Data with High Throughput and Accuracy,”5 demonstrated that for handwritten-digit recognition (the MNIST dataset), accuracy reached 99.0% on fully encrypted data, effectively matching standard unencrypted models. This indicates that for specific classification tasks the trade-off between privacy and precision can be all but eliminated, provided the system can absorb a large increase in per-prediction latency, which CryptoNets offsets by batching many predictions into a single encrypted computation to achieve high throughput.

The “Zero Trust” Investigation

Adopting these technologies doesn’t just change how you process data; it changes how you protect and investigate it.

In our guide to Forensic Readiness: Preparation for Investigations,6 we emphasized that you cannot investigate what you cannot see. This creates a paradox for privacy-preserving analytics. If the server never sees the data, how does the security team know if the data is being misused?

If a malicious insider tries to exfiltrate data from a Homomorphic Encryption environment, what do they get? They get the ciphertext. They get meaningless noise.

This shifts the forensic focus from content inspection (looking for keywords like “Confidential” in outgoing packets) to behavioral analysis and metadata forensics. You don’t need to see that the user downloaded “Strategy_Doc.pdf”; you need to see that the user queried the encrypted datastore 5,000 times in one minute, an impossible volume for a human user.
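A behavioral rule like “5,000 queries in one minute” needs only access metadata, never query content. The sketch below flags users whose query count inside any sliding one-minute window exceeds a threshold. The log format (timestamp, user), the threshold of 1,000, and the user names are hypothetical assumptions for illustration, not a recommended production setting.

```python
from collections import deque
from datetime import datetime, timedelta

WINDOW = timedelta(minutes=1)
THRESHOLD = 1000  # hypothetical: queries per minute no human analyst would plausibly issue

def flag_bursts(events, window=WINDOW, threshold=THRESHOLD):
    """events: iterable of (timestamp, user) pairs sorted by timestamp.
    Returns the users whose query count within any sliding window exceeded threshold."""
    recent = {}      # user -> timestamps of that user's queries inside the current window
    flagged = set()
    for ts, user in events:
        q = recent.setdefault(user, deque())
        q.append(ts)
        while ts - q[0] > window:  # drop queries that fell out of the window
            q.popleft()
        if len(q) > threshold:
            flagged.add(user)
    return flagged

start = datetime(2026, 1, 5, 9, 0, 0)
# A human analyst: one query per minute for 20 minutes.
events = [(start + timedelta(minutes=i), "analyst") for i in range(20)]
# A script: 5,000 queries, one every 10 ms (about 50 seconds in total).
events += [(start + timedelta(milliseconds=10 * i), "svc-export") for i in range(5000)]
events.sort()

print(flag_bursts(events))  # {'svc-export'}
```

The detector never looks at what was queried, which is exactly the point: in an HE environment the payload is ciphertext, but the access pattern is still visible and still evidence.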

Furthermore, we are seeing the rise of Zero-Knowledge Proofs (ZKPs) in forensic auditing. ZKPs allow a system to prove it acted correctly without revealing the data it acted on.5 Imagine an auditor asking, “Did this financial transaction comply with anti-money laundering laws?” The system can generate a mathematical proof that says “Yes, the value was under $10,000 and the sender was not on a sanctions list,” without ever revealing the sender’s name or the exact amount to the auditor.
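A compliance proof like the one above combines range proofs and set non-membership proofs, which are too involved for a short sketch. The example below instead shows the simplest classic ZKP, a Schnorr proof of knowledge made non-interactive with the Fiat-Shamir heuristic: the prover convinces a verifier that it knows the secret x behind a public value y = G^x mod P without revealing x. The group parameters are toy-sized, and the function names and the "audit-2026-001" context label are hypothetical.

```python
import hashlib
import secrets

# Toy group parameters: P = 2*Q + 1 is a safe prime and G = 4 generates the
# subgroup of prime order Q. Far too small for real use.
P, Q, G = 1019, 509, 4

def challenge(public_y: int, commitment: int, context: str) -> int:
    """Fiat-Shamir: derive the verifier's challenge from a hash of the transcript."""
    data = f"{G}|{public_y}|{commitment}|{context}".encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q

def prove(secret_x: int, public_y: int, context: str) -> tuple[int, int]:
    k = secrets.randbelow(Q - 1) + 1  # one-time random nonce
    t = pow(G, k, P)                  # commitment
    c = challenge(public_y, t, context)
    s = (k + c * secret_x) % Q        # response: x is masked by the random nonce k
    return t, s

def verify(public_y: int, context: str, proof: tuple[int, int]) -> bool:
    t, s = proof
    c = challenge(public_y, t, context)
    # Holds because G^s = G^(k + c*x) = t * y^c in a group of order Q.
    return pow(G, s, P) == (t * pow(public_y, c, P)) % P

# The prover holds secret x; everyone knows y = G^x mod P.
x = secrets.randbelow(Q - 1) + 1
y = pow(G, x, P)
proof = prove(x, y, "audit-2026-001")
print(verify(y, "audit-2026-001", proof))  # True
```

The verifier learns that the prover knows x and nothing else; production systems use standardized groups or elliptic curves and, for statements like “amount under $10,000,” dedicated range-proof constructions.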

This capability is revolutionary for compliance. It allows for “auditing without exposure.”

The European Standard: ENISA’s Engineering Approach

While the US market is driven by innovation and competitive advantage, the European market is driven by strict regulation. The European Union Agency for Cybersecurity (ENISA) has been vocal about the need for “Data Protection Engineering.”

In its report Data Protection Engineering,7 ENISA argues that “privacy by design” is no longer just a legal phrase but an engineering requirement. It explicitly lists Privacy-Enhancing Technologies (PETs) as the vehicle to achieve this.

ENISA advises that organizations should stop viewing privacy as a “policy layer” (e.g., asking users for consent) and start viewing it as a “technical layer.” If you use Secure Multi-Party Computation (SMPC) to calculate market share between three competitors, you don’t need to trust the competitors not to steal your sales data. The protocol guarantees they cannot see it.
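The market-share scenario above can be sketched with additive secret sharing, one of the basic building blocks of SMPC. Each firm splits its sales figure into random shares that sum to the real value modulo a large number, hands one share to each participant, and only the sums of shares are published. The sketch simulates all three firms in one process and assumes “semi-honest” participants who follow the protocol; the firm names, figures, and function names are hypothetical. A real deployment runs each party on its own infrastructure and sends shares over authenticated channels.

```python
import secrets

MODULUS = 2**64  # shares live in the integers mod 2^64; must exceed any possible total

def share(value: int, n_parties: int, modulus: int = MODULUS) -> list[int]:
    # n-1 uniformly random shares, plus one share that makes the sum equal the value.
    shares = [secrets.randbelow(modulus) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % modulus)
    return shares

def secure_total(private_values: list[int], modulus: int = MODULUS) -> int:
    n = len(private_values)
    # Row i: the shares created by party i; column j: the share sent to party j.
    dealt = [share(v, n, modulus) for v in private_values]
    # Each party j adds up the shares it received; any single share looks random.
    partial_sums = [sum(dealt[i][j] for i in range(n)) % modulus for j in range(n)]
    # Publishing only the partial sums reveals the total and nothing more.
    return sum(partial_sums) % modulus

sales = {"Firm A": 1_200_000, "Firm B": 800_000, "Firm C": 2_000_000}  # hypothetical
total = secure_total(list(sales.values()))
for firm, own_sales in sales.items():
    # In the real protocol, each firm performs only its own division, locally.
    print(firm, own_sales / total)
# Firm A 0.3
# Firm B 0.2
# Firm C 0.5
```

Note that the output itself still carries information: with only two participants, each could subtract its own figure from the total and learn the other's. Deciding what result is safe to reveal is a design question the protocol does not answer for you.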

This “trustless” architecture is the future of B2B data sharing. In a world of supply chain attacks and vendor breaches, relying on your partner’s security hygiene is a risk. Relying on mathematics is a strategy.

Strategic Implementation: Where to Start?

Implementing Privacy-Preserving Analytics is not a “rip and replace” project. You do not suddenly encrypt your entire data lake homomorphically. It is a targeted tool for specific high-risk use cases.

Here is a practical roadmap for business leaders:

1. Identify “Toxic” Intersections

Look for business processes where two datasets must meet, but neither owner wants to share the data.

- Example: You want to check if your customers are also customers of a partner firm for a co-marketing campaign, but neither of you wants to share your full customer list.

- Solution: Private Set Intersection (PSI). This is a specific form of computation that reveals only the overlapping users (the intersection) and nothing else. It is lightweight, fast, and available today.
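PSI has several constructions. One of the oldest relies on Diffie-Hellman-style commutative blinding: each party raises hashed items to its own secret exponent, and because (H(x)^a)^b = (H(x)^b)^a, items held by both parties end up with identical double-blinded values while everything else stays unlinkable. The sketch below simulates both parties in one process, uses a toy prime modulus rather than the elliptic-curve groups used in practice, and its function names and email addresses are hypothetical; it is not the construction any particular vendor ships.

```python
import hashlib
import math
import secrets

# Toy modulus: 2**127 - 1 is a Mersenne prime. Real DH-based PSI uses an elliptic-curve group.
P = 2**127 - 1

def hash_to_group(item: str) -> int:
    digest = int.from_bytes(hashlib.sha256(item.encode()).digest(), "big")
    return digest % (P - 1) + 1  # a value in [1, P-1]

def keygen() -> int:
    # An exponent coprime to P-1 makes v -> v**k mod P a permutation, so no false matches.
    while True:
        k = secrets.randbelow(P - 2) + 1
        if math.gcd(k, P - 1) == 1:
            return k

def blind(items, key):
    return [pow(hash_to_group(x), key, P) for x in items]

def reblind(values, key):
    return [pow(v, key, P) for v in values]

def psi(a_items, b_items):
    a_key, b_key = keygen(), keygen()  # in reality each party keeps its own key private
    a_list = sorted(a_items)
    # 1. A -> B: H(x)^a for each of A's items.
    a_blinded = blind(a_list, a_key)
    # 2. B -> A: (H(x)^a)^b in the same order, plus H(y)^b for B's items, shuffled.
    a_double = reblind(a_blinded, b_key)
    b_blinded = blind(b_items, b_key)
    secrets.SystemRandom().shuffle(b_blinded)
    # 3. A raises B's values to a; equal double-blinded values mark the intersection.
    b_double = set(reblind(b_blinded, a_key))
    return {x for x, v in zip(a_list, a_double) if v in b_double}

ours = {"alice@example.com", "bob@example.com", "carol@example.com"}
partner = {"bob@example.com", "carol@example.com", "dave@example.com"}
print(sorted(psi(ours, partner)))  # ['bob@example.com', 'carol@example.com']
```

In this flow only party A learns the overlap; party B learns how many customers A submitted but not who they are, which matches the “reveals only the intersection” property described above.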

2. The “Crown Jewels” Analytics

Identify your most sensitive data — genomic data, financial projections, biometric templates. This is where the computational cost of Homomorphic Encryption is justified. Move these specific workloads to an encrypted processing pipeline.

3. Vendor Assessment

When evaluating SaaS vendors, ask them about their “Data in Use” protection. Many modern vendors are beginning to offer “Confidential Computing” environments (using hardware enclaves such as Intel SGX or AWS Nitro Enclaves). These are not homomorphic encryption: the data is decrypted, but only inside a hardware-isolated enclave. Even so, these hardware-based approaches offer a similar promise: the cloud provider cannot peek into the memory where your data is being processed.

Conclusion: The End of the “Data Trade-Off”

For too long, we have accepted a false trade-off: “You can have privacy, or you can have utility. Pick one.”

If you wanted to cure cancer using global patient data, you had to sacrifice privacy. If you wanted absolute privacy, you had to lock the data away and learn nothing from it.

Privacy-Preserving Analytics destroys this trade-off. It allows us to have our cake and eat it too. We can derive the utility, the insight, and the profit from data without ever exposing the underlying secrets.

As we move toward 2026, the companies that thrive will not be the ones with the biggest data lakes. They will be the ones that can safely collaborate, safely compute, and safely innovate on data they don’t even need to see.

Turn Privacy-Preserving Analytics into Business Reality

Ready to secure your “Data in Use” without sacrificing utility? Emutare bridges the gap between advanced cryptography and practical implementation. We empower organizations to navigate this new landscape through AI Adoption Consultation, Data Protection Implementation, and robust Cybersecurity Governance. Whether you need to architect a zero-trust infrastructure or ensure compliance with evolving regulations, our Cybersecurity Advisory services provide the expertise you need. Don’t just lock your data away; compute on it safely with Emutare.

References

- Emutare. (2025). Shadow Data and Silent Failures: Why Your Cloud Strategy Needs a Forensic Upgrade. https://insights.emutare.com/shadow-data-and-silent-failures-why-your-cloud-strategy-needs-a-forensic-upgrade/ ↩︎

- Emutare. (2025). Anonymization vs. Pseudonymization Techniques: A Comprehensive Guide for Modern Data Protection. https://insights.emutare.com/anonymization-vs-pseudonymization-techniques-a-comprehensive-guide-for-modern-data-protection/ ↩︎

- National Institute of Standards and Technology (NIST). “Privacy-Enhancing Cryptography (PEC) Project.” NIST Computer Security Resource Center. https://csrc.nist.gov/projects/pec ↩︎

- Mahatho, M. F., Chen, Y., & Zhang, S. (2024). Performance Analysis of Machine Learning on Homomorphically Encrypted Data. 2024 16th International Conference on Wireless Communications and Signal Processing (WCSP), Hefei, China, pp. 691–696. doi:10.1109/WCSP62071.2024.10826872. https://ieeexplore.ieee.org/document/10826872 ↩︎

- Dowlin, N., Gilad-Bachrach, R., Laine, K., Lauter, K., Naehrig, M., & Wernsing, J. (2016). CryptoNets: Applying neural networks to encrypted data with high throughput and accuracy (MSR-TR-2016-3). Microsoft. https://www.microsoft.com/en-us/research/publication/cryptonets-applying-neural-networks-to-encrypted-data-with-high-throughput-and-accuracy/ ↩︎

- Emutare. (2025). Forensic Readiness: Preparation for Investigations. https://insights.emutare.com/forensic-readiness-preparation-for-investigations/ ↩︎

- European Union Agency for Cybersecurity (ENISA). (2022). Data Protection Engineering. ENISA Publications. https://www.enisa.europa.eu/publications/data-protection-engineering ↩︎
