The rapid evolution of generative AI has brought us to a critical inflection point in 2026. We have moved past simple chatbots that merely summarize text to “Agentic AI”, systems that can autonomously navigate databases, execute code, and trigger business workflows. Central to this shift is the Model Context Protocol (MCP), an open standard designed to give AI models a universal way to access external tools and data. While MCP solves the problem of fragmented integrations, it introduces a profound new challenge: the governance of non-human identities (NHIs).

In the Post-Scanning Era, the risk is no longer just about a user typing a “naughty” prompt; it is about an autonomous agent possessing the credentials to exfiltrate data or modify production environments without a human in the loop. Securing MCP requires more than just traditional perimeter defenses. It demands a sophisticated layer of orchestration that treats every AI-to-system interaction as a high-stakes governed event. This article provides a blueprint for business leaders and IT professionals to move beyond the prompt layer and secure the execution layer of their AI ecosystem.

The “Confused Deputy” Problem in MCP

One of the most significant architectural risks in MCP is known as the “Confused Deputy” problem. Because MCP servers act as intermediaries between an AI model and a sensitive resource (like a CRM or a financial database), they often operate with elevated privileges. If a malicious actor tricks an AI agent through prompt injection, the agent may unwittingly instruct the MCP server to perform an action it should not, such as downloading a full customer list, and the server will carry it out using its own legitimate credentials.

As previously covered in Operationalizing Trust: Fixing the Broken Feedback Loop in Modern SOCs [1], the lack of granular context in security operations often leads to “blind trust.” In an MCP environment, this blind trust is catastrophic. Industry analysis from Google’s What is the MCP and how does it work? [2] (January 2026) confirms that MCP creates a high-risk environment for unauthorized data exposure and arbitrary code execution. Because the protocol allows agents to bridge the gap between models and local systems, adversarial prompts can manipulate it into acting as a “confused deputy” if proper sandboxing is absent.

Google explicitly warns that current MCP implementations often fail to require user consent for tool invocation. To secure these connections, Google mandates the use of identity-based authentication (IAM) and OAuth 2.1 to ensure the downstream resource can verify the legitimacy of a request before execution.
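One way to picture the mitigation is to authorize each tool call against the permissions delegated by the end user, rather than against the server's own credentials. The following is a minimal sketch under that assumption; `ToolCall`, `REQUIRED_SCOPE`, `authorize`, and the tool and scope names are all hypothetical and do not come from any MCP SDK.

```python
from dataclasses import dataclass

# Hypothetical mapping from tool name to the scope a caller must hold.
REQUIRED_SCOPE = {
    "crm.read_contact": "crm:read",
    "crm.export_all_contacts": "crm:export",
}


@dataclass(frozen=True)
class ToolCall:
    tool: str
    user_scopes: frozenset  # scopes delegated by the end user's token


def authorize(call: ToolCall) -> bool:
    """Allow the call only if the user's delegated scopes cover it."""
    required = REQUIRED_SCOPE.get(call.tool)
    if required is None:
        return False  # unknown tools are denied by default
    return required in call.user_scopes
```

With this check in place, an injected instruction to export every contact fails for a user who only holds `crm:read`, even though the server itself could technically perform the export.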

The Surge of “Shadow MCP” and Supply Chain Risk

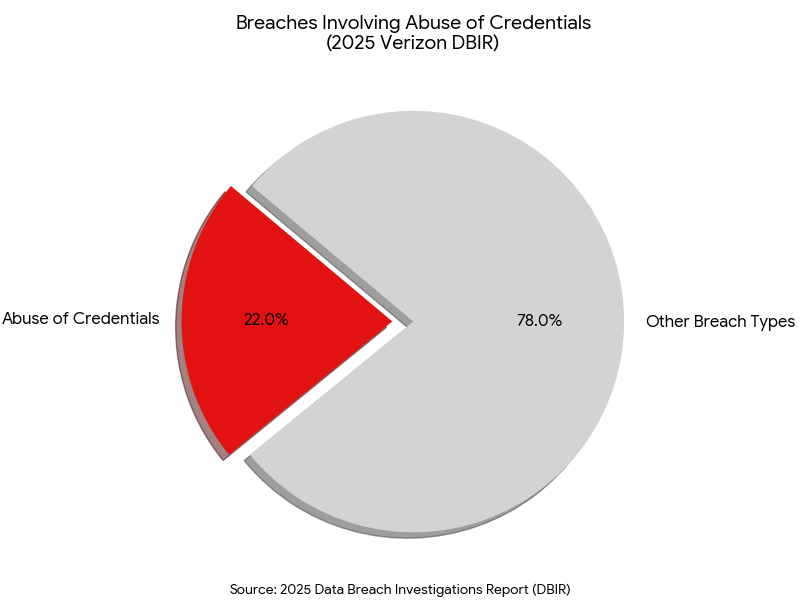

Just as Shadow IT plagued the early 2010s, “Shadow MCP” is the defining governance challenge of 2026. Developers are increasingly using local or unvetted MCP servers from public repositories like GitHub to accelerate AI integration. In the 2025 Data Breach Investigations Report (DBIR) [3], Verizon highlights a critical shift in the threat landscape as organizations increasingly deploy automated tools and AI-driven processes. While human credentials remain a primary target, the report notes that 22% of breaches now involve the abuse of credentials, a figure increasingly compounded by the rise of “Shadow AI” and unmanaged machine identities.

Verizon identifies that many of these automated agents are being integrated into enterprise workflows without the same rigorous oversight applied to human users. This lack of lifecycle management often results in persistent, over-privileged access for non-human entities, creating a significant “blind spot” for security teams. The report emphasizes that as these agents become more autonomous, the failure to secure their specific credentials allows attackers to move laterally through a network with minimal detection.

To understand the danger of unmanaged assets, one must look at API Asset Governance: Identifying and Decommissioning Obsolete Endpoints [4]. An unmonitored MCP server is effectively a persistent, authenticated backdoor. If an MCP server is left active after a project’s completion, it remains a target for “Tool Poisoning” attacks. In these scenarios, attackers inject malicious instructions into the metadata of the tool, which the AI model interprets as legitimate context, leading to silent system compromise.
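One hedged way to detect this kind of metadata tampering is to pin a fingerprint of each tool's definition at approval time and flag any drift later. The sketch below assumes tool definitions are available as dictionaries with `name`, `description`, and `inputSchema` fields; the helper names `fingerprint` and `changed_tools` are illustrative, not part of any library.

```python
import hashlib
import json


def fingerprint(tool: dict) -> str:
    """Stable SHA-256 over the tool's name, description, and input schema."""
    canonical = json.dumps(
        {key: tool.get(key) for key in ("name", "description", "inputSchema")},
        sort_keys=True,
    )
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def changed_tools(approved: dict, current_tools: list) -> list:
    """Return names of tools that are new or whose metadata differs from the pin."""
    return [
        tool["name"]
        for tool in current_tools
        if approved.get(tool["name"]) != fingerprint(tool)
    ]
```

A fingerprint mismatch does not prove an attack, since legitimate updates also change descriptions, but it gives reviewers a prompt to re-approve the tool before the agent sees the new text.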

Recent peer-reviewed research, Progent: Programmable Privilege Control for LLM Agents [5], confirms that AI agents are routinely deployed with far broader access than their tasks require. The Progent study demonstrates that most agentic systems operate with unrestricted or long-lived tool permissions by default, creating persistent over-privilege conditions that significantly expand the attack surface. The research shows that without explicit, programmable privilege boundaries, agents accumulate unnecessary capabilities across workflows, increasing the risk of lateral movement, data exfiltration, and unintended actions. The findings reinforce that over-permissioning is not just a technical oversight; it is a governance failure that expands the attack surface faster than most SOCs can scan it.

Continuous Exposure Management for AI Agents

The complexity of agentic workflows means that “point-in-time” security checks are no longer sufficient. An AI agent’s risk profile changes every time it connects to a new MCP server or accesses a different dataset. This is where Continuous Threat Exposure Management (CTEM) becomes the essential framework for AI safety.

As explored in Stop Patching Everything: The Case for “Continuous Threat Exposure Management” (CTEM) [6], the goal is to shift from patching vulnerabilities to managing exposure. For MCP, this means continuously validating the “reachability” of your most sensitive data via AI agents. If an agent can reach your financial ledger through a series of chained MCP tool calls, that exposure must be governed, regardless of whether a traditional CVE exists.
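Reachability of this kind can be modeled as a path search over a graph of agents, tools, and resources. The sketch below uses a breadth-first search over a hand-written graph; the node names and the `ACCESS_GRAPH` structure are hypothetical, and a real deployment would have to build the graph from its own inventory and permission data.

```python
from collections import deque


def reachable_path(graph: dict, start: str, target: str):
    """Breadth-first search; returns the shortest chain from start to target, or None."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == target:
            return path
        for nxt in graph.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None


# Hypothetical access graph: agent -> MCP tools -> resources.
ACCESS_GRAPH = {
    "support-agent": ["ticket_tool", "sql_tool"],
    "ticket_tool": ["ticket_db"],
    "sql_tool": ["reporting_db"],
    "reporting_db": ["financial_ledger"],  # e.g. a linked view into the ledger
}
```

Calling `reachable_path(ACCESS_GRAPH, "support-agent", "financial_ledger")` returns the chain through `sql_tool` and `reporting_db`, which is the exposure that would need a governing control even though no CVE is involved.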

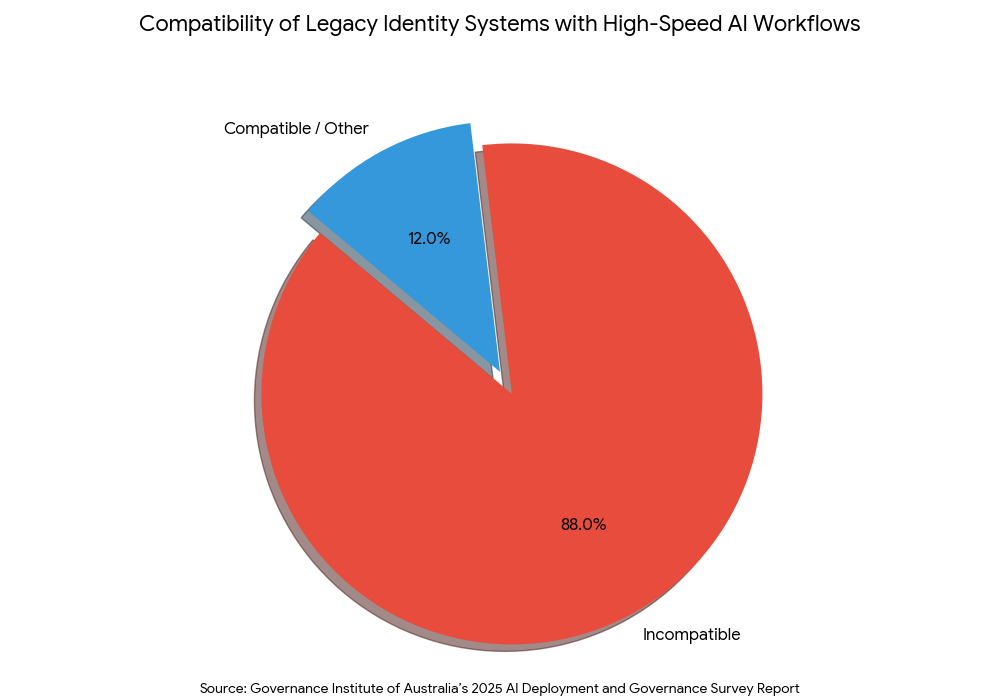

The shift to autonomous AI has exposed a massive infrastructure gap: according to the Governance Institute of Australia’s 2025 AI Deployment and Governance Survey Report [7], 88% of organizations find that their legacy identity systems, such as OAuth, are incompatible with high-speed AI workflows. These protocols were built for static human sessions, whereas AI requires dynamic, non-human identity (NHI) governance that can keep pace with machine-speed execution. The study emphasizes that by 2026, the governance of non-human identities will be a top priority for boards, as agents operate at machine speed and scale, often bypassing the friction points that keep human error in check.

Practical Implementation: A 4-Step Governance Framework

To effectively secure MCP and Agentic AI, organizations should adopt the following structured approach:

1. Discovery and Inventory of the “Agentic Supply Chain”

You cannot secure what you do not see. Organizations must implement automated discovery to identify every MCP server and AI client running in their environment.

- Scan for Local Servers: Detect “Shadow MCP” servers running on developer workstations.

- Map Token Usage: Identify which API keys and service accounts are being utilized by AI agents.
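As a rough illustration of the inventory step, the sketch below parses a client configuration file and emits one row per declared server. It assumes a JSON layout with a top-level `mcpServers` object whose entries carry `command`, `args`, and `env` fields, which some MCP clients use; other clients may differ, so treat the field names and the `inventory_servers` helper as assumptions to verify against your own tooling.

```python
import json


def inventory_servers(config_text: str, source: str) -> list:
    """Extract one inventory row per MCP server declared in a client config."""
    config = json.loads(config_text)
    rows = []
    for name, spec in config.get("mcpServers", {}).items():
        command = " ".join([spec.get("command", "")] + spec.get("args", [])).strip()
        rows.append({
            "source": source,  # e.g. hostname of the workstation scanned
            "server": name,
            "command": command,
            # Record only the names of credential variables, never their values.
            "credential_vars": sorted(spec.get("env", {})),
        })
    return rows
```

Collecting only variable names keeps secrets out of the inventory while still showing which keys and service accounts each server depends on.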

2. Fine-Grained Tool Authorization

Move away from “All-or-Nothing” access. Every tool call made via MCP should be subjected to a policy engine.

- Scoped Permissions: If an agent only needs to read a record, its MCP connection should explicitly forbid delete or write operations.

- Intent Analysis: Implement “Adaptive Guardrails” that use a secondary, smaller LLM to inspect the intent of a tool call before it is executed.
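The scoped-permissions idea can be sketched as a default-deny policy check that runs before every tool call. The agents, tool patterns, and operation names below are hypothetical, and the sketch covers only the static permission check, not the LLM-based intent analysis.

```python
from fnmatch import fnmatchcase

# Hypothetical policy: per-agent allow-list of (tool pattern, permitted operations).
POLICY = {
    "support-agent": [("crm.*", {"read"})],
    "billing-agent": [("ledger.invoice", {"read", "write"})],
}


def is_allowed(agent: str, tool: str, operation: str) -> bool:
    """Default-deny: a call passes only if some rule matches both tool and operation."""
    return any(
        fnmatchcase(tool, pattern) and operation in operations
        for pattern, operations in POLICY.get(agent, [])
    )
```

Here `is_allowed("support-agent", "crm.contacts", "read")` passes, while a `delete` on the same tool, or any call from an agent with no policy entry, is refused.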

3. Human-in-the-Loop for High-Impact Actions

Autonomy should not mean a lack of oversight.

- Confirmation Thresholds: Any action that could result in data deletion, financial transfer, or configuration changes must require explicit human approval via a secure “Confirmation UI.”

- Auditability: Ensure every action taken by an agent is logged in an immutable audit trail, linking the initial user prompt to the final system action.
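Both controls can be combined in a small gate that blocks high-impact actions lacking a named approver and records every decision in a hash-chained log. This is a sketch under stated assumptions: the action names in `HIGH_IMPACT`, the `AuditLog` class, and the `execute` function are illustrative, and a hash chain only makes tampering detectable; true immutability would still need write-once storage.

```python
import hashlib
import json

HIGH_IMPACT = {"delete", "transfer_funds", "change_config"}  # hypothetical action names


class AuditLog:
    """Append-only log where each entry's hash covers the previous entry's hash."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(prev: str, record: dict) -> str:
        body = json.dumps(record, sort_keys=True)
        return hashlib.sha256((prev + body).encode("utf-8")).hexdigest()

    def append(self, record: dict) -> None:
        prev = self.entries[-1]["hash"] if self.entries else "0" * 64
        self.entries.append(
            {"record": record, "prev": prev, "hash": self._digest(prev, record)}
        )

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev = "0" * 64
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != self._digest(prev, entry["record"]):
                return False
            prev = entry["hash"]
        return True


def execute(action: str, prompt_id: str, approved_by, log: AuditLog) -> str:
    """Block high-impact actions without a named approver; log every decision."""
    outcome = "blocked" if action in HIGH_IMPACT and not approved_by else "executed"
    log.append({"prompt_id": prompt_id, "action": action,
                "approved_by": approved_by, "outcome": outcome})
    return outcome
```

The `prompt_id` field is what links the originating user prompt to the final system action, so an investigator can trace a logged deletion back to the request that caused it.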

4. Behavioral Anomaly Detection

Because agents move data far faster than human users, security teams must monitor for shifts in agent behavior.

- Volume Thresholds: Flag agents that suddenly begin downloading large quantities of data.

- Communication Patterns: Alert on unexpected agent-to-agent communication that could indicate lateral movement.
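A volume threshold can be as simple as comparing an agent's current transfer volume with its own recent baseline. The sketch below flags a value that exceeds the historical mean by more than `k` standard deviations; the function name, the default `k` of 3, the minimum sample count, and the floor on the deviation are all illustrative choices rather than recommended settings.

```python
from statistics import mean, pstdev


def is_volume_anomaly(history: list, current: float,
                      k: float = 3.0, min_samples: int = 5) -> bool:
    """Flag `current` if it exceeds the baseline mean by more than k deviations."""
    if len(history) < min_samples:
        return False  # not enough baseline data to judge
    mu = mean(history)
    sigma = pstdev(history)
    # Floor the deviation so a nearly flat baseline does not flag tiny changes.
    threshold = mu + k * max(sigma, 0.1 * mu, 1.0)
    return current > threshold
```

A per-agent baseline matters here, because a volume that is routine for a reporting agent may be a strong exfiltration signal for a support agent.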

Secure your agentic future with Emutare. As the Model Context Protocol (MCP) redefines AI integration, we provide the governance needed to prevent “Shadow MCP” and “Confused Deputy” risks. Our AI Adoption and Technology Consultation helps you build secure frameworks for non-human identities. We offer AI-Driven Process Automation and Cybersecurity Governance to ensure your agents operate within strict privilege boundaries. From IT Asset Management to SIEM and XDR Deployment, Emutare delivers the visibility and behavioral detection required for a resilient AI ecosystem.

Conclusion

The Model Context Protocol represents the future of AI interoperability, but it also marks a new era of risk. As we move into 2026, the distinction between “AI security” and “system security” is disappearing. To protect the enterprise, we must treat AI agents as the most powerful, and potentially the most dangerous, non-human identities in our network.

By integrating the principles of CTEM, operationalizing trust through improved feedback loops, and maintaining a rigorous inventory of API and MCP assets, business leaders can harness the power of agentic AI without opening a permanent backdoor to their most critical data. The path forward is not to stifle AI innovation, but to wrap it in a governance layer that is as dynamic and intelligent as the agents themselves.

References

1. Emutare. (2025). Operationalizing Trust: Fixing the Broken Feedback Loop in Modern SOCs. https://insights.emutare.com/operationalizing-trust-fixing-the-broken-feedback-loop-in-modern-socs/

2. Google. (2025). What is the MCP and how does it work? https://cloud.google.com/discover/what-is-model-context-protocol

3. Verizon. (2025). 2025 Data Breach Investigations Report (DBIR). https://www.verizon.com/business/resources/Tbb1/reports/2025-dbir-data-breach-investigations-report.pdf

4. Emutare. (2025). API Asset Governance: Identifying and Decommissioning Obsolete Endpoints. https://insights.emutare.com/api-asset-governance-identifying-and-decommissioning-obsolete-endpoints/

5. Shi, T., He, J., et al. (2025). Progent: Programmable privilege control for large language model agents. arXiv. https://arxiv.org/abs/2504.11703

6. Emutare. (2025). Stop Patching Everything: The Case for “Continuous Threat Exposure Management” (CTEM). https://insights.emutare.com/stop-patching-everything-the-case-for-continuous-threat-exposure-management-ctem/

7. Governance Institute of Australia. (2025). 2025 AI Deployment and Governance Survey Report. https://www.governanceinstitute.com.au/app/uploads/2025/04/AI-deployment-and-governance.pdf
